Posts posted by Lee_P

-

3 hours ago, Magnum said:

I've just had a look at the single subs in both APP and MaximDL. APP has an autostretch like PixInsight and also a neutralise background checkbox. If I leave that unchecked, the colours vary hugely, but with it checked they all become similar except for differences in gradients and vignetting. The D1 has the least vignetting and gradients, next is the D3, then the P3, and finally no filter is the worst. So I'd say that, with whatever makes up the majority of your local LP, the D1 is performing best. However, you said that conditions were worse on the night you used the D3, so maybe you can try that again. I would take a single sub with each filter on the same night, just to eliminate conditions as a variable. I prefer to evaluate filters with single subs rather than stacks of several hours, purely because they can all be taken within 10 minutes under identical conditions.

Ah, no can do -- I sold the D1 and returned the P3!

Thinking about gradients, I just checked the Moon phase on the night of each test:

No filter: 43%

D1: 76%

P3: 85%

D3: Not visible

Thanks for checking those subs. I think the differences could well be explained by varying conditions on each night. Your approach of testing them all on the same night would be ideal, but alas it isn't possible.

-

11 hours ago, Magnum said:

Wow, thanks for being the guinea pig and doing this test, Lee. They are so close, aren't they! I think no filter looks the worst, and possibly the D1 looks to be picking up the Ha jets on M82 the best, but as you say, the conditions weren't favourable to the D3.

I'd be interested in seeing a single sub from each, to see which has the least noise in the background like you did at the top of the thread, but including the D3.

Maybe you could also upload a single raw FITS file for each filter to Dropbox or something, so I could try and compare in MaximDL.

Lee

Yes, sounds good. My processing steps to produce these JPEGs could be smoothing out any differences, so it'd be good if you could run some tests too.

I've uploaded single subs and the three hour stacks here: https://drive.google.com/drive/folders/1sANwEglGFLrTOntqL5okqSLd32x82FBy?usp=sharing

Let us know how you get on!

-

Following @Magnum's suggestion, I bought an IDAS LPS-D3 to test as well. Obviously this purchase resulted in weeks of cloud (sorry), but over the last two nights I managed to obtain three hours of data to make a comparison with No Filter, IDAS LPS-D1, and IDAS LPS-P3. Drumroll...

Hmmmm, hard to see much difference.

I ran the source subs for each stack through PixInsight to get a measure of seeing quality by calculating the average number of stars visible.

No filter: 876

IDAS LPS-D1: 1129

IDAS LPS-P3: 904

IDAS LPS-D3: 787

From that I infer that the D3 had an uphill struggle due to poor sky conditions. (The seeing was noticeably worse when I was out under the stars setting up for the D3 test.) Also, I do think that the colours the D3 gives look like the best of the bunch, but it's admittedly hard to tell in the examples above.

To be honest I'm not sure if the D3 is having a positive impact, or if I'm just making excuses to avoid returning another filter to FLO! What do you all think? 🤔

-

On 14/05/2021 at 20:14, Lee_P said:

Ok, I followed your thorough instructions with a few modifications that may have messed things up -- I saved as TIFFs so I could put them into Photoshop. Then once I'd adjusted the levels, I saved as 16bit so I could export as JPEG for the GIF. This is my result. I don't see the noise level going down appreciably, but I do see an increased gradient.

Here are the FITS files. If you've got the time, I'd be very interested to see my experiment done properly!

Attached: 24.fit, 22.fit, 20.fit, 18.fit, 16.fit, 14.fit, 12.fit, 10.fit, 8.fit, 6.fit, 4.fit, 2.fit

Thanks,

-Lee

Hi @vlaiv, have you been able to make a fair comparison with these FITS files? I'm interested to see the results when done properly, and not bodged like I've done 😅

-

5 hours ago, AstroMuni said:

Your image is beautiful btw. I am probably class 6 and I have street lights around 40ft away from where I park my scope.

You could check here: https://www.lightpollutionmap.info/

I'd have guessed you'd have darker skies than Bortle 6 to get that much detail in just 8 minutes!

-

6 hours ago, vlaiv said:

Sure, it is rather easy to do. There are several ways, but I'll show you the easiest one.

- Either drag and drop your FITS onto the ImageJ bar (I drop it below the toolbar, onto the status bar, but it will show the words "drag & drop" as soon as you drag a FITS anywhere over that window), or go to File / Open ... and select your FITS.

- Make sure you are working with a 32-bit FITS. If not, convert it to 32-bit (just click the menu option to switch data type: it is in the Image / Type menu).

- If your FITS is a colour one, you'll get a "stack" of three separate images, one per channel (this is ImageJ terminology, not the stacked image we astronomers call a stack). Move the bottom scroll bar onto the middle one (green), then right-click anywhere on the image and you'll get a menu:

Marked are the scroll bar and the menu option, Duplicate. Once you hit that, it will ask whether you want to duplicate the "stack" (several slices) or just one slice:

Leave "Duplicate stack" unchecked and this will create a new image: a copy of just the green channel.

Close the original stack of three channels, as we will be working just on green for the time being.

- Hit the Analyze / Measure menu option.

You should get stats for your image, and the Median value should be there (it's not shown in the screenshot as it is far right, but the Mean value is shown).

If by any chance you don't have the median in the results, you need to customize your measurements. This is done via the Analyze / Set Measurements menu:

There are quite a few options you can use. Just check Median and make sure you have enough decimal places for precision.

Now you can repeat the measurement via Analyze / Measure and you should have the Median value included.

- Note down the median value (in my case it is 0.05964....) and do Process / Math / Subtract.

- After that, you can do another measurement to confirm the result:

Here we go: old and new value.

- In the end, File / Save As / FITS ...

Repeat with the other files ...
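For anyone who prefers scripting, the ImageJ steps above can be sketched in Python with NumPy. This is a minimal sketch, not vlaiv's exact procedure: it assumes a colour FITS loads as a (3, height, width) array with green as the middle slice (matching the three slices ImageJ shows), and it uses astropy's `fits.getdata` / `fits.writeto` for the file handling.

```python
import numpy as np


def green_channel_median_subtracted(data: np.ndarray) -> np.ndarray:
    """Mimic the ImageJ steps: take the green slice, convert to 32-bit float,
    and subtract its median so the background sits at zero."""
    # Assumption: a colour FITS is shaped (3, height, width); index 1 is green.
    green = data[1] if data.ndim == 3 else data
    green = green.astype(np.float32)           # Image / Type / 32-bit
    median = np.float32(np.median(green))      # Analyze / Measure (Median)
    return green - median                      # Process / Math / Subtract


def process_file(in_path: str, out_path: str) -> None:
    """Read one FITS, normalize its green channel, write the result."""
    # astropy is only needed for file I/O, so it is imported here; the
    # function above works on plain NumPy arrays without it.
    from astropy.io import fits

    data = fits.getdata(in_path)
    fits.writeto(out_path, green_channel_median_subtracted(data), overwrite=True)
```

Calling `process_file` once per stack plays the role of "Repeat with the other files".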

Ok, I followed your thorough instructions with a few modifications that may have messed things up -- I saved as TIFFs so I could put them into Photoshop. Then once I'd adjusted the levels, I saved as 16bit so I could export as JPEG for the GIF. This is my result. I don't see the noise level going down appreciably, but I do see an increased gradient.

Here are the FITS files. If you've got the time, I'd be very interested to see my experiment done properly!

Attached: 24.fit, 22.fit, 20.fit, 18.fit, 16.fit, 14.fit, 12.fit, 10.fit, 8.fit, 6.fit, 4.fit, 2.fit

Thanks,

-Lee

-

1 hour ago, vlaiv said:

In that case, I have no idea (don't own/use PI).


I can do it for you, however, if you wish. I'm using ImageJ and it's really easy for me to do. Just attach the FITS files that you want normalized (like you did with the 90 and 135 minute exposures) and I'll be happy to do it for you.

Alternatively - if you want to install ImageJ / Fiji - I'll be happy to show you how to do it in that software.

Thanks, that's very kind. I'm here to learn, so I'll gladly take you up on the offer of showing me how to do it in ImageJ. I've downloaded the software. If I fail then I'll take you up on your offer of just doing it for me 😂

-

46 minutes ago, AstroNebulee said:

Have you tried stacking the subs with the darks just to see if there's any improvement? As you say, the background noise should smooth out with more light frames taken. I know with my SW 72ED, AzGTi and Canon 600D I don't take any darks at all now, just flats and bias, as I find the darks just add more noise. Maybe it's just my setup, but quite a few others don't take darks either. Here's a stack of a few of mine, only 50 min to 1 hr of total subs. Not award winners by any means at all.

Nice pics! Back when I started with my camera I did some tests, and found that Darks + Flats gave me the best results. I might try again though, as I'm learning a lot about how to make proper comparisons.

-

41 minutes ago, AstroMuni said:

There is definitely something not quite right. I am no expert in this and I got this literally as my first image and using around 500secs worth of data with no darks or flats. So comparing mine and 24hrs of yours I would have said you should have got a much clearer image.

That's a great picture, especially for such a short integration time. The images in my test just had some very basic edits done, to allow them to be compared fairly. This is a fully edited version with 24 hours of integration time. Looks a bit better than my simple tests:

What level of light pollution do you have? I'm going to guess you're imaging from some nice dark skies? I'm in a city centre, which puts me at a big disadvantage with broadband targets like M81.

-

1 hour ago, vlaiv said:

It looks like the images are 32-bit, but the statistics panel only has the option to display info for up to 16-bit 🥴

-

9 hours ago, vlaiv said:

As a simple fix, you can just "wipe" the background with a simple process.

Using PixelMath, just subtract the median value of each stack from it.

After doing that, all subs should have a median value of 0 and the backgrounds should be normalized. There will still be some intensity mismatch due to atmospheric absorption at the different altitudes of the object during recording, but that is a minor thing. You should get usable results just from setting the median value to the same value across stacks.
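A minimal NumPy sketch of that background "wipe", assuming each stack is a linear array shaped (channels, height, width). It subtracts a separate median from each colour channel, so every channel of every stack ends up with a median of 0. This illustrates the idea only; it is not PixelMath syntax.

```python
import numpy as np


def wipe_background(stack: np.ndarray) -> np.ndarray:
    """Subtract each channel's median so its background sits at 0.

    Assumes a linear image shaped (channels, height, width) or (height, width).
    """
    img = stack.astype(np.float64)
    if img.ndim == 2:
        return img - np.median(img)
    # One median per channel. Subtracting a single overall median from an
    # RGB image would generally leave the per-channel medians non-zero.
    medians = np.median(img, axis=(1, 2), keepdims=True)
    return img - medians


def per_channel_medians(stack: np.ndarray) -> list[float]:
    """Median of each channel, handy for checking the result."""
    return [float(np.median(ch)) for ch in stack]
```

Applying `wipe_background` to every stack before comparing them puts all backgrounds at the same level.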

Ok, thanks, this makes sense to me. I tried it and something is amiss, as after my PixelMath subtraction, the median value isn't 0. Could someone versed in PixelMath point out where I'm going wrong?

Before PixelMath. Median value = 29.

My PixelMath expression to remove the median value:

After subtraction, the median value is 4. Shouldn't it be 0?

Further proof that I've got it wrong. The left half of the image is 2 hours of integration, the right half is 24 hours. These are after my evidently suspect process of subtracting median values!

-

1 hour ago, vlaiv said:

The first thing you need to do is normalize the frames.

They have to have the same background level and the same star intensity. I think PI should have this feature, but since I don't use PI, I have no idea how it's performed.
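One way to normalize one linear stack against another is a linear fit: find a scale `a` and offset `b` so that `a * target + b` best matches the reference, which matches star intensity and background level in one go. The sketch below is a plain least-squares version using NumPy's `np.polyfit`, assuming both stacks are registered to the same reference frame. It is not PixInsight's implementation, and a practical version would probably exclude saturated stars before fitting.

```python
import numpy as np


def normalize_to_reference(target: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Scale and offset `target` so it matches `reference` (least squares).

    Assumes both linear images are registered, so pixel (y, x) sees the same
    patch of sky in each. Fits reference ~ a * target + b: `a` matches star
    intensity and `b` matches background level.
    """
    t = target.astype(np.float64).ravel()
    r = reference.astype(np.float64).ravel()
    a, b = np.polyfit(t, r, 1)  # coefficients come back as (slope, intercept)
    return a * target.astype(np.float64) + b
```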

After that, when intensities are normalized, a linear stretch is simple: you apply the same levels to all frames, moving just the top and bottom sliders without touching the middle one, and you should get the same linear stretch.

In fact, when frames are normalized, you don't have to do a linear stretch: as long as you apply the same levels / curves (you can save them as a preset), you should get compatible results.
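A sketch of a levels-style linear stretch with shared black and white points, in NumPy. The `black` and `white` values are placeholders you would choose yourself; the point is that the same pair is applied to every normalized frame, so the results stay comparable.

```python
import numpy as np


def linear_stretch(img: np.ndarray, black: float, white: float) -> np.ndarray:
    """Levels with only the black and white points moved (no midtone change).

    `black` maps to 0, `white` maps to 1, values in between scale linearly,
    and anything outside that range is clipped.
    """
    out = (img.astype(np.float64) - black) / (white - black)
    return np.clip(out, 0.0, 1.0)


def stretch_all(images, black: float, white: float):
    """Apply the identical (black, white) pair to every image."""
    return [linear_stretch(im, black, white) for im in images]
```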

I've been trying for the last hour but just can't crack the normalisation stage. I'm sure it's simple, so I need to find some tutorials. I'll pause this for now, but thanks for all your help!

-

3 hours ago, vlaiv said:

Well, there won't be much visual difference between 90 min and 135 min. That is only a 50% increase in the number of frames, or about a 22.47% increase in SNR, which is on the edge of perception.
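The 22.47% figure follows from SNR scaling with the square root of integration time. A quick check in Python, assuming equal-length subs whose noise is random from sub to sub (a fixed pattern that repeats in every sub does not average down this way):

```python
import math


def snr_gain(t_short: float, t_long: float) -> float:
    """Relative SNR improvement going from t_short to t_long of integration.

    Assumes equal-length subs with noise that is random between subs, so SNR
    grows as the square root of the number of subs (i.e. of total time).
    """
    return math.sqrt(t_long / t_short)


print(f"{(snr_gain(90, 135) - 1) * 100:.2f}%")  # 90 min -> 135 min
print(f"{snr_gain(60, 24 * 60):.2f}x")          # 1 hour -> 24 hours
```

The second line shows why a 1 hour versus 24 hour comparison should, under those assumptions, show a clear (roughly 4.9x) SNR difference.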

However, it can be seen with a bit of "trickery". The human brain is very good at recognizing patterns, and if you give it two patterns side by side, it will spot similarities and differences rather easily.

Here is an animated GIF that I made from the 90 minute and 135 minute FITS: green channel, linearly stretched, using the "split screen" trick.

The right part of the frame does not change: it is the 135 minute FITS. The left side alternates between the 90 and 135 minute FITS.

It is obvious that the 135 minute data looks a bit smoother, with smaller grain and more uniform than the 90 minute data. Even with such a small increase in data, the difference can be seen if you know where to look for it.

No. Background extraction will remove the very uniform component of the background signal and leave the background noise in place.

Here is an analogy to help you understand better. Imagine that you have a mountain trail full of rocks that you walk on. It's a bumpy ride: that is noise. Background level is just the difference between that path being on Mount Everest versus your local hill. The bumps in that trail won't change if you lower the mountain.

The last and most important thing is that, although the size of the bumps in the path does not change, their size relative to the mountain does. Rocks averaging 10 cm on a path on Mount Everest, at 8848 m or whatever it is, are almost one part in 100,000, but if you "wipe" the mountain and leave a local hill that is 100 m high, the rocks are now 1 in 1000.
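The analogy can be checked numerically. This NumPy sketch uses made-up levels (a sky background of 5000 versus 100, with the same random noise added to both). The standard deviation, i.e. the size of the "rocks", is the same in both cases, while the noise relative to the background level is far smaller on the bright "mountain".

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical linear background: the same random "bumps" on two levels.
noise = rng.normal(0.0, 10.0, size=100_000)
with_sky = 5000.0 + noise   # bright, light-polluted "mountain"
wiped = 100.0 + noise       # same bumps after most of the sky level is removed

for name, img in (("with sky", with_sky), ("wiped", wiped)):
    sd = img.std()
    print(f"{name}: std={sd:.2f}, std/mean={sd / img.mean():.4f}")
```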

This makes no difference to the image itself; it only makes a difference to processing parameters, namely where you put your black point. Depending on that, you'll get a different relative noise level: the same image can appear quite noisy or very smooth depending on how you process it. Here is the 135 minute data in two stretch versions:

Same image - different background.

One of the most important skills to learn in image processing is to push the data only as far as it allows. It is SNR that matters. In my view, it is better to keep the noise at bay by sacrificing some of the signal: maybe you won't be able to show those faint tidal tails nicely, or the outer parts of the galaxy will be darker than you'd like, but the image will be smooth with very little noise.

Not being able to show faint detail does not mean that you did not push your data enough - it means that you did not spend enough time imaging.

Thanks vlaiv, this is pure gold. I think I'm getting my head around it...

I tried reproducing your example. I find it useful, but did I mess up the "linear stretch" aspect? If you could give me a pointer on how to do that in Photoshop, maybe I could make a version 2.

And here's a graph of noise against integration time.

Thanks again!

-Lee

-

1 hour ago, Laurin Dave said:

Have you measured the different stacks in Subframe Selector? It looks to me as if the longer integration time stacks are getting harder stretches. Maybe put the same stretch on each stack and then compare. Also, what's happening to M81 and M82: are they getting better?

Oh, you're clever 😎

Looks like the noise level is going down?

Here's the noise level over 24 hours of data:

So thanks to this and @vlaiv's insight, I think I understand that the noise level *is* going down, it just doesn't appear to in my GIFs because the process I used was automatically stretching it. BUT! I still don't understand why the noise pattern is the same in every image. Is that because of the automatic stretching?

To answer your other question, here's M81 over 24 hours:

And a version of the same data adjusted in Photoshop to keep the galaxy's brightness consistent:

-

2 hours ago, Seelive said:

Last year I made some (rough) measurements of the effect of different stacking methods (I use DSS). I generally try to use at least 80 lights (180 s each, so 4 hrs total). I found that with Average stacking the resulting noise closely followed the 1/√N formula, and similarly for Kappa-Sigma stacking, but only up to about 30 lights. After that, the resulting noise leveled off and occasionally appeared to increase slightly. These days I tend to Kappa-Sigma stack the lights in separate batches of 20 to 30 and then Average stack the results. It seems to give me the same resulting noise as Average stacking does, but without the satellite trails and hot pixels.
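For readers unfamiliar with Kappa-Sigma stacking, here is a minimal NumPy sketch of the idea, plus the batch-then-average approach described above. It is not DSS's implementation; `kappa=2.5`, `iterations=3` and `batch_size=25` are illustrative assumptions.

```python
import numpy as np


def kappa_sigma_stack(subs: np.ndarray, kappa: float = 2.5, iterations: int = 3) -> np.ndarray:
    """Per-pixel mean of `subs` (shape: n_subs, height, width) after repeatedly
    rejecting values more than `kappa` standard deviations from the mean."""
    data = np.ma.masked_invalid(subs.astype(np.float64))
    for _ in range(iterations):
        mean = data.mean(axis=0)
        std = data.std(axis=0)
        outliers = np.abs(data - mean) > kappa * std
        # masked_where keeps anything already masked in earlier passes.
        data = np.ma.masked_where(outliers, data)
    return data.mean(axis=0).filled(np.nan)


def batched_stack(subs: np.ndarray, batch_size: int = 25, **kw) -> np.ndarray:
    """Kappa-Sigma stack each batch of subs, then plain-average the batches."""
    batches = [kappa_sigma_stack(subs[i:i + batch_size], **kw)
               for i in range(0, len(subs), batch_size)]
    return np.mean(batches, axis=0)
```

Note that clipping needs enough subs per batch: with very few values, even a wild outlier cannot sit more than `kappa` sigmas from the mean, so it is never rejected.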

Ok, interesting. Could be worth me investigating. Thanks!

-

2 hours ago, scotty38 said:

I cannot really add much apart from to say I have noticed ABE introduces noise similar to what you see here and, as yet, I've not been able to work out what settings I need to change or if it's my data. APP can make a better job of the stacking using its defaults. Having said that I know there are enough folk getting great results out of PI that I have basically accepted it's me/my data at fault.

I can see the screenshots, but are you using WBPP? Have you looked at Adam Block's videos for V2 of the script, as there may be info in there that could help. I've not watched them all yet, by the way...

Edit, just to be clear: what I mean re ABE adding noise is that if I use STF on a stacked image and then apply ABE, the ABE version has noise whereas the STF image does not.

Interesting, I thought that ABE / DBE appears to introduce noise because they're removing gradients (e.g. from light pollution) that are essentially masking the underlying noise. If that makes sense? I could be wrong!

I am indeed making my way through Adam Block's WBPP2.0 videos -- it's those that prompted me to make the tests including FlatDarks and CosmeticCorrection!

-

15 minutes ago, vlaiv said:

Can you post just couple of linear stacks without stretching them (as fits please as I don't have PI and can't open xifs)?

Visual feedback on how much noise there is depends on the stretch level. I think you used the PI automatic stretch, and that one works on the basis of the noise floor. This is why it stretched each sub so that the noise in the background is the same.

You can see that by the size of the stars in your animations.

There is much more "light halo" around the left star than the right one, but they are the same star (you can see this in your animated GIFs: just watch any single star and see it "bloat" as total exposure increases).
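To illustrate why a noise-based auto stretch hides the improvement, here is a simplified stand-in in NumPy. It is NOT PixInsight's STF algorithm; it just places the black and white points a fixed number of noise sigmas (hypothetical `k_low` and `k_high`) around the background median, estimating sigma from the median absolute deviation. Because the stretch effectively divides by sigma, a noisy stack and a smooth one come out with roughly the same displayed background noise.

```python
import numpy as np


def noise_floor_autostretch(img: np.ndarray, k_low: float = 3.0,
                            k_high: float = 20.0) -> np.ndarray:
    """Simplified auto stretch keyed to background noise (not PixInsight's STF)."""
    med = np.median(img)
    # Robust noise estimate: median absolute deviation scaled to a Gaussian sigma.
    sigma = 1.4826 * np.median(np.abs(img - med))
    black, white = med - k_low * sigma, med + k_high * sigma
    return np.clip((img - black) / (white - black), 0.0, 1.0)
```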

In order to measure noise accurately, take two stacks that are still linear, select a piece of background not containing any signal (no stars, no objects, mostly empty), and do stats on each image in that region.

Compare the pixel standard deviation values between images.
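The measurement described above can be sketched in NumPy as follows. It assumes linear, registered stacks and a star-free patch picked by eye; `y0`, `x0` and `size` are hypothetical coordinates. Pass a single channel (e.g. green) rather than a whole RGB array.

```python
import numpy as np


def background_noise(img: np.ndarray, y0: int, x0: int, size: int) -> float:
    """Pixel standard deviation in a size x size patch starting at (y0, x0).

    The patch is assumed to be pure background: no stars, no nebulosity.
    """
    patch = img[y0:y0 + size, x0:x0 + size]
    return float(np.std(patch))


def compare_background_noise(stacks: dict, y0: int, x0: int, size: int) -> dict:
    """Measure the same patch in each (linear, registered) stack."""
    return {name: background_noise(img, y0, x0, size) for name, img in stacks.items()}
```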

If you want to visually assess the difference between two images, make sure they are normalized (one with respect to the other), or that they are stacked against the same reference frame. Then make a "split screen" type image while you still have linear data, prior to any stretch. This is easily done by selecting half of one stack and pasting it in the same place onto the other stack.

Only when you have such an image, do the stretch to show the background noise. This way you are guaranteed to have the same stretch on both images, as you'll be stretching a single image made up of two halves of different stacks.
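A sketch of the split-screen composite in NumPy, assuming two normalized, registered linear stacks of identical shape. Any stretch is applied to the returned image afterwards, so both halves receive exactly the same stretch.

```python
import numpy as np


def split_screen(left_src: np.ndarray, right_src: np.ndarray) -> np.ndarray:
    """Left half from one linear stack, right half from another.

    Works for (height, width) or (channels, height, width) arrays.
    """
    if left_src.shape != right_src.shape:
        raise ValueError("stacks must have the same shape")
    out = right_src.copy()          # leave the input stacks untouched
    half = out.shape[-1] // 2
    out[..., :half] = left_src[..., :half]
    return out
```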

Thanks vlaiv, I'm going to read all that carefully -- for now, attached are four FITS stacks 😃

-

FYI I've conducted a few more tests and have started a new thread about this:

-

Hi SGL Hive Mind,

I’ve got a real head-scratcher of a problem, and I’m hoping someone here can help me solve it. I’ve been experimenting with seeing the effects of increasing integration time on background noise levels. My understanding is that the greater the total integration time, the smoother the background noise should appear. But I’m finding that beyond one hour of integration, my noise levels see no improvement, and even maintain the same general structure.

I flagged this in another thread but think it deserves its own thread, so I thought I’d begin anew.

I figure either my understanding of integration and noise is incorrect, or maybe I’ve messed up something in pre-processing. I’ve conducted a lot of tests with different settings, copied below, but nothing seems to make much difference. I’ve uploaded my data to GDrive, in case anyone’s feeling generous with their time, and would care to see if they get the repeated noise pattern! (Being GDrive, I think you need to be logged into a Google account to access).

My telescope is an Askar FRA400, and the camera is a 2600MC-Pro. All data are 120-second subs shot from Bortle 8 skies. For each test, I applied some basic functions in PixInsight just to get images to compare: ABE, ColorCalibration, EZ Stretch, and Rescale to 1000px. I used SCNR to remove green in the first tests, but forgot that step for the second batch.

Any idea what's going on? Why isn't the noise smoothing out past the one hour mark?

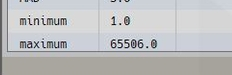

Here are my PixInsight ImageIntegration settings:

-

1 hour ago, CloudMagnet said:

I'll be honest, I can't comment on PixInsight; I just stick to good old DSS. The settings don't look like they would cause any problems at first glance. You could try in DSS, and if you get the same result we can rule out pre-processing as the cause.

I've tried using DSS but can't get a decent image out of it, so obviously I'm doing something wrong. Why is nothing ever easy?! 😅

-

26 minutes ago, CloudMagnet said:

Seem to be having a problem downloading them; I think my computer doesn't like it.

I don't think it would be user error anyway. What program and settings are you using for stacking?

You might need to be signed into a Google account? https://drive.google.com/drive/folders/1simtZOsVAgwuqIT44uMkJ_a_jzGvhE3n?usp=sharing

I used PixInsight. I'm a relative newbie, so wouldn't be surprised if I were doing something dumb that's causing the effect. Here are my settings:

-

@CloudMagnet @Ouroboros @ Anyone Else That's Interested

Could we try ruling out user error? It seems possible that I've messed up the pre-processing or integration and that this is causing the noise pattern. I've uploaded the files from the latest test here: https://drive.google.com/drive/folders/1simtZOsVAgwuqIT44uMkJ_a_jzGvhE3n?usp=sharing Any chance you could try some integrations and see if you get the same effect? No worries if that's too much hassle for you!

FYI, the Lights folder contains the 10px drizzled data. The file that begins "REF" is what I used as my reference file. If you want to reproduce the exact field of view of my tests, the location ("Region of Interest" in PixInsight) is:

Left: 2973

Top: 1756

Width: 400

Height: 400

I then rescaled to 1000px x 1000px to make it easier to see.
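To reproduce that field of view outside PixInsight, here is a minimal NumPy sketch: crop with the coordinates above, then upscale 400 px to 1000 px. The upscale is simple pixel replication (nearest-neighbour style), an assumption made for simplicity; the rescale used in the tests probably interpolated differently, so fine grain may look slightly different.

```python
import numpy as np

# Region of interest from the post, in pixels.
LEFT, TOP, WIDTH, HEIGHT = 2973, 1756, 400, 400


def crop_roi(img: np.ndarray, left: int = LEFT, top: int = TOP,
             width: int = WIDTH, height: int = HEIGHT) -> np.ndarray:
    """Cut out the ROI; works for (height, width) or (channels, height, width)."""
    return img[..., top:top + height, left:left + width]


def resize_nearest(img: np.ndarray, out_h: int = 1000, out_w: int = 1000) -> np.ndarray:
    """Upscale by pixel replication: each output pixel copies the input pixel
    its top-left corner falls in."""
    h, w = img.shape[-2:]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[..., rows[:, None], cols[None, :]]
```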

What are the odds that we'll see that same noise pattern again? 🤔

-

1 hour ago, Ouroboros said:

@Lee_P Mmmmmm ... The background noise doesn’t get much better with increasing dither does it? I doubt the graininess is down to your Bortle 8 skies though. Light pollution wouldn’t have that much structural detail in it would it? It would just be a slowly varying background fog across the skies surely.

Those variations in background structure are consistent too. They get more noticeable the more frames you average. So they’re either inherent variations in the sensitivity of your image sensor or they are in deep space aren’t they?

I wonder whether 10 pixels dither on your image camera is enough. Or is that 10 pixels on your guide cam, which I think works out at 30+ pixels on your image camera (looking at your system specs)?

I also wonder how you’re stretching the images. If you’re using automatic stretch is this somehow making the background noise appearing to not get much better as you average more frames?

I reckon it's 10 pixels on the guidecam. That's the highest possible setting, so I'd be surprised if it weren't enough.
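Whether 10 guide-camera pixels of dither is a lot on the imaging camera depends on the ratio of the two pixel scales (206.265 × pixel size in µm ÷ focal length in mm gives arcseconds per pixel). A sketch in Python; the imaging values assume the 2600MC-Pro's 3.76 µm pixels and the FRA400's 400 mm focal length, and the guide values (3.75 µm, 120 mm) are purely hypothetical, since the guiding setup isn't given here.

```python
def pixel_scale(pixel_um: float, focal_mm: float) -> float:
    """Image scale in arcseconds per pixel."""
    return 206.265 * pixel_um / focal_mm


def guide_to_imaging_px(dither_guide_px: float,
                        guide_pixel_um: float, guide_focal_mm: float,
                        img_pixel_um: float, img_focal_mm: float) -> float:
    """Express a dither given in guide-camera pixels in imaging-camera pixels."""
    arcsec = dither_guide_px * pixel_scale(guide_pixel_um, guide_focal_mm)
    return arcsec / pixel_scale(img_pixel_um, img_focal_mm)


# Assumed imaging train: 3.76 um pixels at 400 mm.
# Hypothetical guide setup: 3.75 um pixels at 120 mm.
print(f"{guide_to_imaging_px(10, 3.75, 120.0, 3.76, 400.0):.1f} imaging px")
```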

Re: stretching, I used EZ Stretch in PixInsight.

🤔🤔🤔

-

IDAS LPS-P3 Light Pollution Suppression Filter

in Member Equipment Reviews


That's the question -- is the D3 worth having? I'm not sure it's benefiting the images appreciably enough over no filter to justify its £184 cost. *Maybe* its images look a little bit better if you zoom in a lot and squint 😅

Happy to have run these tests anyway! My main message to anyone else reading this thread wondering about light pollution filters would be that their effectiveness is very much dependent on your local sky conditions. So don't rely on reviews from other people, get hold of one and test it!