Posts posted by JamesF

-

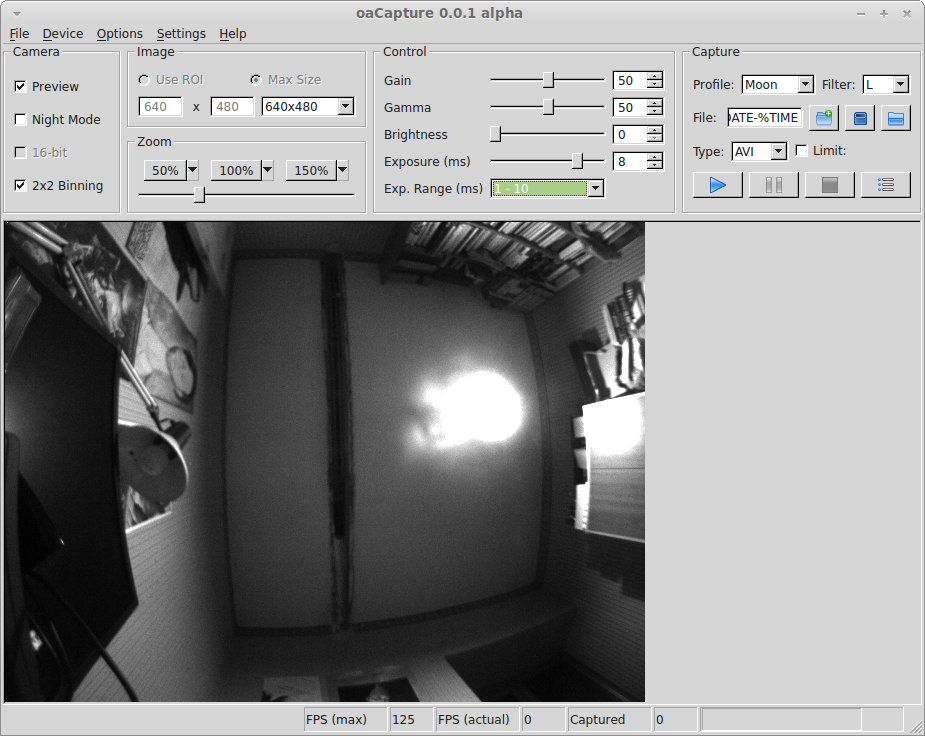

And here we have 1280x960 mono with 2x binning to 640x480.
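
For anyone unsure what 2x binning does to the frame, here is a minimal software sketch in Python. It assumes an 8-bit mono frame stored as row-major bytes and assumes the four pixels of each 2x2 block are summed and clipped at 255; the post does not say whether the camera sums or averages, so `bin2x2` is purely illustrative.

```python
def bin2x2(frame, width, height):
    """Combine each 2x2 block of an 8-bit mono frame into one pixel.

    Assumption: the block is summed and clipped to 255. Averaging
    (total // 4) would be the other obvious choice.
    """
    if width % 2 or height % 2:
        raise ValueError("width and height must both be even")
    out_w, out_h = width // 2, height // 2
    out = bytearray(out_w * out_h)
    for y in range(out_h):
        top = 2 * y * width        # start of the upper source row
        bottom = top + width       # start of the lower source row
        for x in range(out_w):
            i = 2 * x
            total = (frame[top + i] + frame[top + i + 1]
                     + frame[bottom + i] + frame[bottom + i + 1])
            out[y * out_w + x] = min(total, 255)
    return bytes(out), out_w, out_h
```

A 1280x960 input comes out as 640x480, which matches the frame sizes mentioned above.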

James

-

I've just been having a look at all the examples and documentation that come with the Point Grey SDK. I think I almost prefer none.

Definitely suffering from information overload now. I think I'll do the QHY cameras next.

James

-

I suspect that "having to ask permission" is probably not going to work very well from the point of view of open source. I shall ponder on that one.

James

-

It's a tricky one, certainly. Normal RGB is great to work with from a capture point of view because you don't need to do much with it, but it doesn't compress very easily. Splitting the RGB into colour planes would make it easier to compress because of the increased coherence, at the expense of more computation. And then there are cameras that can only do YUV or somesuch. It would be nice to cope with those, but expanding the data to RGB to store it in a file isn't ideal.

However, I guess we're not really desperately interested in getting the absolute best possible compression, are we? Merely reducing the data volume to a more manageable level. That would allow some compromises to be made. The requirements for storing capture data are not the same as those for storing hours' worth of television or film video in a usable format.

Perhaps the path of least resistance would be to expand SER to store RGB data in three colour planes and use one of the existing codecs (or something derived from one) to compress the colour planes.
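
To make the colour-plane idea concrete, here is a minimal Python sketch of splitting packed RGB24 data into three separate planes. It assumes the input is interleaved as R, G, B bytes per pixel; `split_planes` is a hypothetical helper and not part of SER or of any codec.

```python
def split_planes(rgb):
    """Split packed RGB24 bytes (R, G, B, R, G, B, ...) into three planes."""
    if len(rgb) % 3:
        raise ValueError("buffer length must be a multiple of 3")
    # Every third byte, starting at offsets 0, 1 and 2, is one colour plane.
    return bytes(rgb[0::3]), bytes(rgb[1::3]), bytes(rgb[2::3])
```

Each plane could then be handed to a greyscale-capable compressor on its own, which is the "increased coherence" trade-off described above.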

James

-

Hmmm. I'm not sure I have a camera that supports raw colour output. I know the SPC900 will if you change the firmware, but I don't really want to do that right now. I'll have to check to see if the 120MC will. I need a goal for tomorrow anyhow. The 120MM works fine now.

I'd still like to find a decent non-lossy and widely supported codec that can handle 8-bit and 16-bit greyscale video though. At the moment I'm writing the data raw and whilst it's smaller than the RGB equivalent, I'm sure it could be better.
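
For a sense of scale, the uncompressed size of a frame is simple arithmetic. The sketch below is illustrative only: `frame_bytes` is a hypothetical helper, and the 1280x960 frame size is taken from elsewhere in this thread.

```python
def frame_bytes(width, height, bits_per_sample, samples_per_pixel=1):
    """Uncompressed size of one frame, rounding each sample up to whole bytes."""
    bytes_per_sample = (bits_per_sample + 7) // 8
    return width * height * samples_per_pixel * bytes_per_sample


mono8 = frame_bytes(1280, 960, 8)        # 1,228,800 bytes
mono16 = frame_bytes(1280, 960, 16)      # 2,457,600 bytes
rgb24 = frame_bytes(1280, 960, 8, 3)     # 3,686,400 bytes
```

So raw 8-bit mono is a third of the RGB24 size, and raw 16-bit mono is two thirds of it, before any codec gets involved.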

James

-

Sounds like you are charging ahead with your project James, good stuff.

It's quite unexpected in a way. I've spent most of the last year or so writing code for a single project and it's quite hard to bring myself to sit down at a computer and write even more code after finishing each day, but having started this I'm really very much enjoying it. It's perhaps not the tightest code I've ever written, but I have learnt a fair bit about Qt, video formats, the ffmpeg library, threads and got myself back into a bit of C++ programming, so I really can't complain there.

To top it all I plugged in my 120MM a moment ago and it "just worked" even without monochrome support. I couldn't work out why until I discovered that the mono camera driver actually supports RGB24 as well.

I'm going to force it into mono mode and make that work anyhow, because I'll surely need that for other cameras along the way and it would be nice to keep the output file sizes down where possible.

James

-

That was easily done then.

I shall start work on capturing in mono later this evening. I've not done anything about that yet.

James

-

No it does not accept BGR24. I just used a for loop to swap the pixels around, I found that easier than figuring out the swscale library!

Chris

By strange coincidence I had barely two minutes ago come to the same decision.

James

-

Ah, well, there's always just one more gotcha.

The Ut Video codec appears not to want to play if I tell it the file format is BGR24 rather than RGB24. Fortunately I believe the ffmpeg swscale library can convert from the former to the latter. I just have to work out how to use it.

James

-

Well, having put the camera to one side for a few hours to allow me to get on with some work, I've returned to it this evening. Having basically decided that setting the USB bandwidth control variable to 40 is the only option for getting things working, I now have functioning capture with the 120MC.

I found something bright red and something bright blue and verified that the blue and red channels did appear to be swapped over. Fortunately there's a Qt function that does the swap. Now I just need to sort out writing an AVI file in BGR format rather than RGB. I don't think that should be too hard as long as the Ut video codec is happy about it.
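
The red/blue swap itself is a small operation. Here is a minimal Python sketch, assuming packed 24-bit pixels with three bytes each; `swap_red_blue` is a hypothetical name and is not the Qt function mentioned above.

```python
def swap_red_blue(pixels):
    """Return a copy of packed 24-bit pixel data with bytes 0 and 2 of each pixel swapped.

    This converts BGR24 to RGB24 and RGB24 to BGR24 alike.
    """
    if len(pixels) % 3:
        raise ValueError("buffer length must be a multiple of 3")
    out = bytearray(pixels)
    # The right-hand side is evaluated first, so both slices are copied
    # before either assignment happens.
    out[0::3], out[2::3] = out[2::3], out[0::3]
    return bytes(out)
```

Applying it twice gives back the original buffer, which makes for an easy sanity check.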

I also still need to sort out the binning and there is a 12-bit mode that may not be supported outside the ASCOM driver, but I shall come back to the first after getting the 120MM to work and worry about 12-bit later I think.

James

-

Aha! Set the control to 40 and I get an absolutely rock-solid image at 1280x960. That's a step forward. Now I just need to sort out why the red and blue channels appear to be transposed and why the ROI control still isn't working properly.

Shame I ought to be working really, but this is far more interesting.

James

-

Well, setting the value to 100 doesn't appear to do any good.

That just causes the library not to return to my code at all.

James

-

I've seen very similar results from my QHY5L-II in one of the FireCapture betas, though it was solved by resetting the camera - perhaps more to do with the timing issues at a hardware level.

That does seem consistent with what ZWO are telling me. I have an inkling that I might sometimes have seen it with the ASI120 in FireCapture too, though not often.

Either the Point Grey Firefly or QHY cameras are next on my list to get working, so we shall see what happens there. I need to get monochrome capture working before going too much further. I've not tried that at all yet.

James

-

ZWO are suggesting that it happens if there's insufficient bandwidth on the USB bus and that there's a control for alleviating the problem I can use, but I have no documentation for it at all as yet.

I've picked the shared library apart a little (without access to the source) and it appears there's a separate execution thread that manages the camera and drops data into a buffer for the caller. I suspect that there may be synchronisation problems if the USB side can't keep up. Personally, if I didn't have a new frame ready I'd probably arrange for the user to get the same frame twice rather than give them a damaged frame without flagging some error, but I guess that's a design choice...
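
The "same frame twice" alternative could look something like the Python sketch below. Everything in it is hypothetical (the `FrameSource` class, the size check used to spot a bad frame); it says nothing about how the ZWO library actually works internally.

```python
class FrameSource:
    """Hand out the last good frame again when a new one is missing or short."""

    def __init__(self, expected_size):
        self.expected_size = expected_size
        self.last_good = None
        self.repeats = 0   # how many times a stale frame was handed out

    def deliver(self, frame):
        """Return (frame, repeated). 'repeated' flags that the frame is stale."""
        if frame is not None and len(frame) == self.expected_size:
            self.last_good = frame
            return frame, False
        self.repeats += 1
        return self.last_good, True
```

The caller always gets either a complete frame or an explicit flag that the frame is a repeat, so a damaged frame is never passed on silently.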

For the moment it might be a case of just tinkering with the controls to see what happens. I've read the limits for the control from the library which says it should range from 40 to 100, but that the value set in the camera from cold start is 1. That doesn't bode well. I'll have to check that the camera default settings are in range and sort them out before starting a capture run I think.
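
Checking that a default is in range before a capture run is a one-liner. A minimal Python sketch, using the figures quoted above (limits of 40 to 100, cold-start value of 1); `clamp_control` is a hypothetical helper and not part of the ZWO library.

```python
def clamp_control(value, minimum, maximum):
    """Force a control value into the range the library reports as valid."""
    return max(minimum, min(value, maximum))


# The cold-start value of 1 is below the reported minimum of 40.
safe_value = clamp_control(1, 40, 100)   # 40
```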

James

-

Oh, also the fact that I get part of the raw colour data. I don't at any point have direct access to the raw data.

James

-

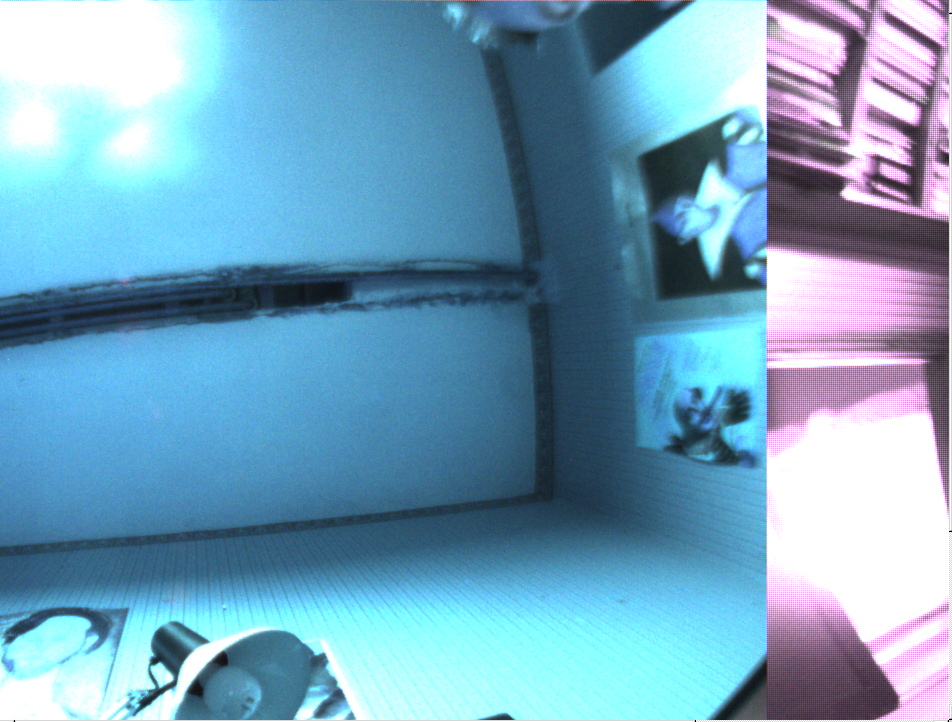

Here's an example of the problem, this time from my capture program:

I think the fact that this happens in both my capture code and in their demo program which share no common code above the C/C++ and USB libraries strongly suggests to me that the likely cause is their library.

James

-

Well, I'm still no further forward.

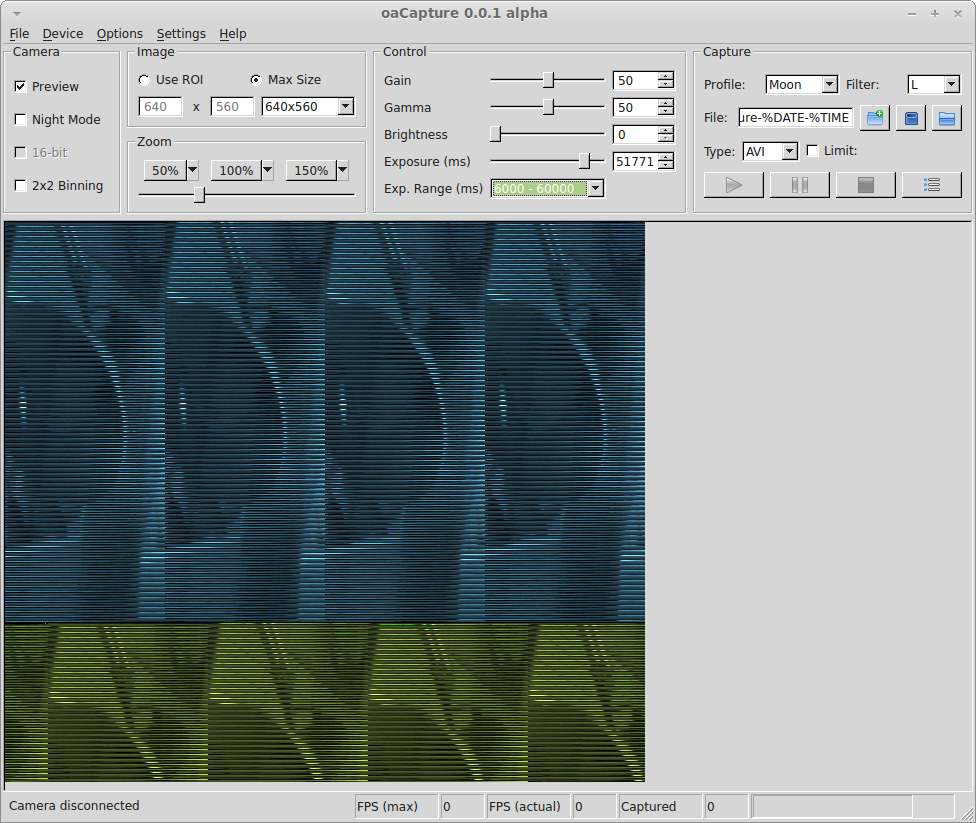

I'm actually having my doubts about the reliability of the ASI library at the moment. I built their demo program, which basically just grabs a frame and throws it on the screen repeatedly. At times it "jumps" and I see an image that has, say, the left-hand two thirds apparently not debayered, whilst the right hand third is debayered, but is actually the part of the image that should be on the left-hand side of the image. It looks a bit as though the buffers for the debayered and raw images are getting overlapped for some reason, or the data for the image isn't being copied from the correct place.

James

-

I now have an inkling that the frame returned by the SDK library is a plane of red, then green, then blue whereas everywhere else I'm using 8 bits red, 8 bits green and 8 bits blue, repeated over and over again. The image I'm getting at the moment looks like this:

I'll reorder the data and see if that helps.
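
If the frame really is delivered as a plane of red, then green, then blue (only a suspicion at this point), the reordering could be sketched in Python as below. `interleave_planes` is a hypothetical helper and the plane order is an assumption.

```python
def interleave_planes(planar, width, height):
    """Convert planar data (all R, then all G, then all B) to packed RGB24."""
    n = width * height
    if len(planar) != 3 * n:
        raise ValueError("buffer does not hold three full planes")
    out = bytearray(3 * n)
    out[0::3] = planar[0:n]          # red plane
    out[1::3] = planar[n:2 * n]      # green plane
    out[2::3] = planar[2 * n:3 * n]  # blue plane
    return bytes(out)
```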

James

-

A quick tinker over lunchtime and I have the window resize on rescaling problem fixed.

I think I've also tracked down the problem with changing the ROI though it's going to take a little longer to fix. I discovered that some V4L2 cameras need partially restarting when the resolution is changed and added functionality to do that. It appears that's not enough for the ASI camera though. Looks like I need to completely disconnect from the camera and reinitialise it from scratch, otherwise it dumps core in the library's debayering code.

I'm not entirely sure that all the frames are being returned correctly either. There's no demo code for anything other than 8-bit monochrome and next to no documentation, so I may be doing something wrong. Further investigation required, but it looks like it could be related to debayering too.

James

-

Very nice

I like the UI

Thank you. I have to admit to pinching some ideas from FireCapture for the UI, but imitation is the sincerest form of flattery. My plan is eventually to allow the controls to be either at the top or the side. I think with a netbook it might fit better on the screen if the controls are alongside rather than above the preview window.

James

-

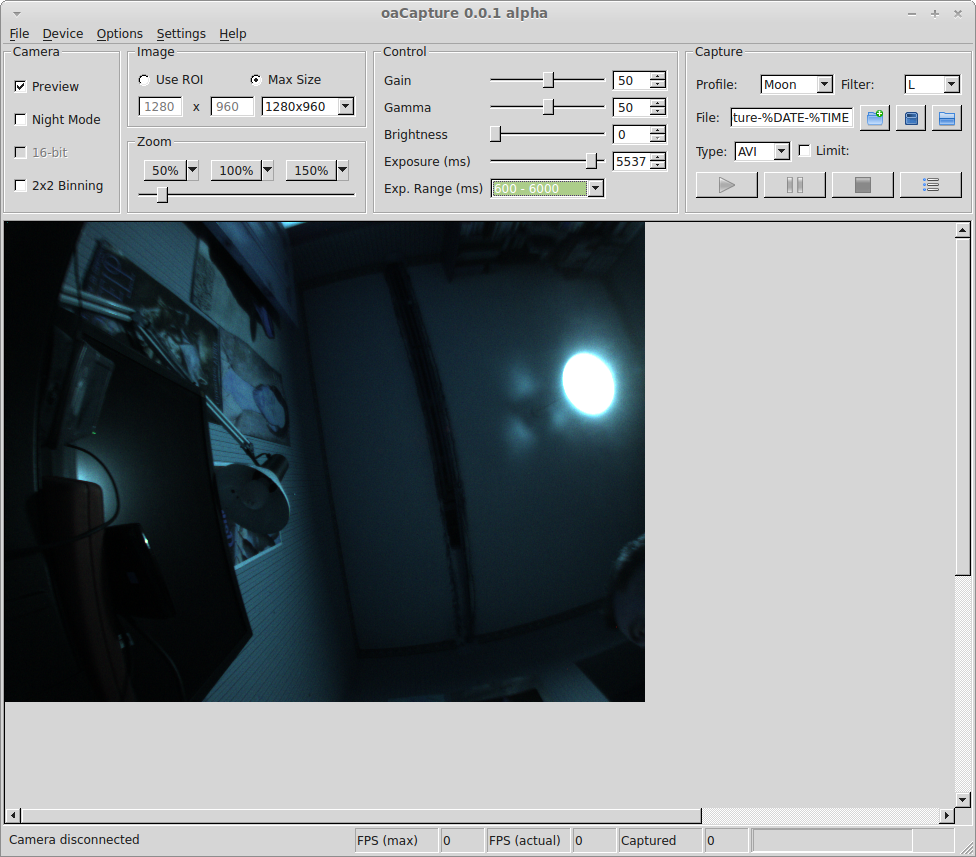

Laydeez an gennelmun! I present to you a screenshot of data captured from my ASI120MC.

This is using the 2.1mm lens that was originally supplied with it.

I still have some way to go with support for this camera, but given that the only documentation is a few comments in the header file for ZWO's library and that I've probably only put five or six hours into integrating it with my existing application I'm feeling rather pleased with myself right now. I'm actually surprised how relatively easy it's been given that I didn't really look at the ZWO API in detail before I started coding the application. Hopefully that's a reasonable indication that I have pretty much the right design for the interface.

Of the bits still to do, I particularly need to sort out changing the ROI and look into the exposure times as the limits for that control returned by the library look quite odd. I always intended to have a separate pop-up window that exposed all the available camera controls rather than just those that are probably immediately useful for imaging and I think I might need to bring that forward as there's a very blue cast to the image that can probably be fixed using the blue and red balance controls.

I also need to think about how I'm going to hook the binning into resolution changes, but that's lower priority.

Oh, and there's clearly a bug where the preview pane doesn't get resized when I rescale the image to be smaller, so the scroll bars are left in place. I shall have to fix that (the image from the camera is showing scaled to 50% in this capture).

James

-

It might be useful to look at that one day, but for the purposes of getting the application working in the first place I'm not going to worry about it right now. I hand the bitmap to Qt and let it decide how best to deal with it and that's been ok thus far. If necessary it's an area I can look at optimising in the future.

My main concern regarding performance right now is if I should have a separate thread for writing the data to disk. I can see some justification for that, but again it's not been a major performance issue so far (and I have been doing some testing on an old Aspire One netbook), so I think time is better spent on getting a usable application rather than tuning something that may not actually need tuning.
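
For reference, a separate writer thread is usually a small producer/consumer arrangement. The Python sketch below shows the general shape only; the application itself is Qt/C++, and `start_writer`, the queue depth of 64 and the `None` sentinel are all assumptions made for illustration.

```python
import queue
import threading


def start_writer(path, max_pending=64):
    """Start a background thread that appends queued frames to a file.

    Returns (frames, worker). Put bytes on 'frames' to write them and
    put None to tell the worker to finish and close the file.
    """
    frames = queue.Queue(maxsize=max_pending)

    def run():
        with open(path, "wb") as out:
            while True:
                frame = frames.get()
                if frame is None:      # sentinel: no more frames
                    break
                out.write(frame)

    worker = threading.Thread(target=run, daemon=True)
    worker.start()
    return frames, worker
```

The capture loop then only pays for a queue insertion per frame. The bounded queue means it blocks rather than exhausting memory if the disk falls far behind.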

James

-

Been working on ASI support since it turned cloudy this evening.

It's just occurred to me however that I also need support for a histogram before I can really use the application for imaging. I shall see if I can get that done as well.
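
The data behind a histogram display is cheap to compute. A minimal Python sketch for an 8-bit mono frame held as bytes (`histogram` is a hypothetical helper; a 16-bit frame would need more bins or bucketing):

```python
def histogram(frame):
    """Count how many pixels of an 8-bit frame take each value from 0 to 255."""
    counts = [0] * 256
    for value in frame:
        counts[value] += 1
    return counts
```

A pile-up in the last bin (255) is the usual sign of an over-exposed frame.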

James

-

Actually there are one or two tricky problems to solve, though they're more UI issues than application logic ones.

Some cameras appear to allow for arbitrary ROIs to be set. The ASI120 allows ROIs, but only a fixed set of sizes. Other cameras allow different resolutions to be used, but still show the full field of view (a bit like binning, I guess). Combining all of those into a tidy yet meaningful presentation in the UI is going to need some thought.

The camera also supports 2x binning, but only at a single frame size. That is, you can't set an ROI and use binning on that. Which means the binning option will have to interact with frame size selection. I'd not considered the need for that before. Hardly the end of the world though.
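
One way to model the interaction between binning and frame size is to let the chosen frame size decide which binning options are offered. The Python sketch below is illustrative only: the list of ROI sizes is made up, and the 1280x960 full frame is taken from elsewhere in this thread.

```python
FULL_FRAME = (1280, 960)
# Illustrative values only: the real set of fixed ROI sizes is not listed here.
ROI_SIZES = [(1280, 960), (640, 480), (320, 240)]


def allowed_binning(frame_size):
    """2x binning is only offered at the full frame size."""
    return [1, 2] if frame_size == FULL_FRAME else [1]


def output_size(frame_size, binning):
    """Validate a frame size and binning pair and return the delivered image size."""
    if frame_size not in ROI_SIZES:
        raise ValueError("unsupported frame size")
    if binning not in allowed_binning(frame_size):
        raise ValueError("binning not available at this frame size")
    return frame_size[0] // binning, frame_size[1] // binning
```

A UI could call `allowed_binning` whenever the frame size changes and enable or disable the binning control accordingly.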

James

Capture software for Linux

in Discussions - Software

Posted

Thank you, Alan.

After I got the binning done last night I tidied up a few other bits such as disabling binning for colour (I'm not really sure I understand what that might mean in real terms), disabling SER output for colour (not that SER output is even implemented yet) and displaying the current FPS and camera temperature.

I then got a bit stuck trying to decide what to do next.

I want to have a fairly rigorous clean-up of the code and put together some sort of build system before releasing the source. Some of it is a bit chaotic because I wasn't sure where I was going when I started (I didn't even own at least one of the cameras when I started). That doesn't however prevent people using a binary release should they wish, so I can perhaps delay doing that work a little.

I really would like it to support at least some of the Point Grey, QHY and TIS cameras fairly early on. I have a Firefly MV and at first sight the PGR documentation and SDK looks very comprehensive, but I can't help thinking there probably aren't as many Point Grey users out there as there are QHY and TIS. I have a QHY camera (a couple now, in fact), but whilst the alleged Linux SDK is open source it is also completely undocumented and what comments there are in the code appear to be in Chinese. I think it only supports a subset of the cameras, too. There are other open source projects that support some of the "missing" cameras, but again it's code-only and I'd need to spend some time just getting my head around all the camera-side code rather than working on the application. I don't have a TIS camera at all. I was tempted to bid on a DMK21AU04 on ebay last week, but decided if I'm going to get one I'd rather have the 618 in preference to what is pretty much an SPC900 in different clothes. At least then I might be able to run some comparisons between the TIS camera and the ASI120. I might look for a reasonably-priced second hand one that I can sell on at a later date. It would also be quite nice to work out why the SPC900 works on some releases and not others and fix that somehow.

On the other hand, if I write a bit of documentation, get a working histogram display and perhaps implement a reticle for the preview window and rip out or disable the UI for the bits I've not done yet then it's probably a usable capture application that supports the SPC900, a range of other webcams including the Lifecams (and probably the Xbox cam given a bit of tinkering with the driver) and both the ASI120 colour and mono cameras as well as perhaps the ASI130. I could get it out there and get some feedback whilst working on the other bits. I'm quite tempted by this. I'm quite happy to give the source to anyone who wants it at the same time on the understanding that it has sharp edges and it's not my fault if they hurt themselves whilst they're trying to make it work.

I'm a little apprehensive about the aftermath of releasing it, I have to admit. Last time I did anything similar there were probably only two different Linux distributions that were both installed from a stack of 3.5" floppies, any potential user could be relied upon to be in possession of a significant amount of clue and everything was far simpler. These days you've no idea what you're letting yourself in for.

James