Posts: 31,960

Days Won: 182

Posts posted by JamesF

-

Thanks to everyone who has offered feedback thus far. I've been thinking about another alpha release this evening and am coming to the conclusion that it should include at least:

finishing as much as possible of the functionality in the existing UI -- everything except handling filters, I suspect

restructuring the bits of the code that I'd have done better if I'd known more when I started

and optionally:

Support for the QHY5

Support for those cameras supported by the QHY open source Linux SDK (most importantly for me, the QHY5L-II, but others too)

Support for the TIS cameras, or at least the DxK21s, assuming I can get hold of some to test with, along with any information about making them work

Anything anyone else feels is desperately needed as soon as possible?

James

-

You're very welcome

James

-

Thank you so much James. So without the added riser the only way the telrad could fit if it was quite a bit more over to the right, it would clear the rim and be raised enough without one ?, but not sure I'd like that anyway for comfort, so to me looks the riser is pretty much a must and something else I'd have to get,

The problem is that "a bit more over to the right" is the end of one of the spider arms, and the base would then foul the nut retaining that, so it has to go "a fair bit more over to the right".

James

-

Here's the photo of mine:

Without the riser the telrad can't go where the optical finder is because it fouls the cage ring on the other side of the truss. It won't fit where I have it for exactly the same reason. Adding the 2" riser lifts the body clear of the cage ring and it would fit either side of the truss pole fitting, but I like to have the optical finder too so that's why I have the arrangement I do.

I'm afraid I can't comment on the balance, however. As you can see from that photo, mine isn't exactly standard, mostly because I obtained it in a number of different pieces from different sources, and I fitted it to my own base:

And I've messed up the balance even more since I reassembled it by adding a Moonlite focuser. The telrad is very light, however, so if you have reasonable balance already I don't think it will be affected too much. And in all honesty, if it did make the top a little heavy, it's such an incredibly useful thing to have that I'd just add a counterweight to the bottom end rather than not have one.

The position I have my telrad in does mean I have to "lean over" the OTA slightly to use it, but I've really not found that to be a problem at all.

James

-

It's a really awkward fit with the standard baseplate alone. It will fit, but perhaps not in the most convenient place. The problem is that the back of the telrad body fouls the lower ring of the upper cage where it widens to take the truss, and it isn't possible to move it "just a bit" further round because then the baseplate fouls one of the spider nuts (make your own joke up there; I was spoilt for choice).

With a 2" or 4" extension the telrad body is lifted out of the way of the ring and there's no problem at all.

If you'd like a photo, let me know and I'll drag mine out so you can see how it fits.

James

-

Though it does seem to me that you have taken on a long term, full time project with this one...

Sometimes you just have to throw yourself off and trust that it will all work out ok in the end

But wait no longer. I have started a new thread so the topic is more relevant:

http://stargazerslounge.com/topic/197489-alpha-release-of-capture-application-for-linux/

James

-

Actually, it seems that both 12.04 and 11.10 (which I am now installing whilst downloading Fedora 19) are able to operate the SPC900 because they offer me the opportunity to crack the monitor by taking a picture of myself to go with my username, and display what is currently visible from the camera quite happily. Perhaps I need to look into this a little further. Could be that support for the SPC900 in earlier kernels is merely a case of tweaking a few things rather than wholesale rewriting.

James

-

Ubuntu 12.04 just finished installing and I've patched it fully. The application works, but the SPC900 code throws a wobbler. It appears that between the v3.2 kernel that is in Ubuntu 12.04 and the v3.5 kernel that is in Mint 14 (and presumably therefore Ubuntu 12.10) the driver was fixed. I shall have to ponder on the need for a userspace SPC900 driver for the earlier kernel. If I ponder long enough with any luck there'll be no requirement for one
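If the application ends up choosing a code path based on the running kernel, a minimal Python sketch might look like the following. It assumes, purely from the observation above, that the in-kernel SPC900 driver works from v3.5 onwards and misbehaves on earlier kernels; `needs_spc900_workaround` and the 3.5 cut-off are illustrative, not a confirmed fix version.

```python
import platform
import re

# Illustrative cut-off taken from the observation above (broken on v3.2,
# working on v3.5); the kernel release that actually fixed the driver is not known.
FIXED_KERNEL = (3, 5)


def kernel_version(release=None):
    """Return (major, minor) from a release string such as '3.2.0-23-generic'."""
    if release is None:
        release = platform.release()  # on Linux this is the `uname -r` string
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def needs_spc900_workaround(release=None):
    """True if the running (or given) kernel predates the assumed fix."""
    return kernel_version(release) < FIXED_KERNEL
```

Comparing (major, minor) tuples rather than strings avoids the classic trap of "3.10" sorting before "3.5".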

James

-

I now have access to the QHY developer board. It's a bit, err, chaotic, but I now need to spend my time reading through it a bit.

James

-

I've joined the QHY forums and asked for access to their developer area. We'll see what comes of that. I suspect their focus is away from supporting the older cameras now, especially the ones that are no longer in production, but there must be an awful lot of them out there so it would be nice to support them in my code.

The SDK for the ASI cameras is basic and not well documented, but functional. I don't like the fact that it trashes frames if the USB bandwidth control is set too high and I'm inclined to believe that could be entirely dealt with in software, but without seeing the code I don't know and they're far from the only cameras to have the same issue. It seems to work fine now I have it configured suitably anyhow.

The Point Grey SDK does indeed look comprehensive. Almost to the point of being overwhelming. I think they are to be applauded for that.

I can't help wondering though... So many of these companies claim "trade secrets", "giving away competitive advantage" or other such guff when asked about releasing the source for their drivers. For the most part I reckon that's a load of cobblers. Many of the cameras are built around well-known chipsets. Anyone who is reasonably competent (enough to write their own driver, at least) and desperate to understand what's going on at the PC side of the interface can easily get hold of the necessary tools to reverse engineer the software enough to re-write it. And re-writing one probably wouldn't take that long either. I think it's far more likely the story is "we don't want to", "the lawyers won't let us" or "we're too embarrassed to". The camera firmware may be a different case, but few people are asking for that to be open source.

But that's an argument for another time

Meanwhile, back in Gotham City, my install script appears to work and my Mint 15-built binaries work on Mint 14, as do the SPC900 and ASI cameras. I'm just finishing installing Ubuntu 12.04 to try it there and I believe I have copies of 11.04 and 10.04 that I can try it on. Unless they've already been used for pigeon-scarers I might have some of the .10 releases as well. I'm just trying to decide if I can face downloading a couple of Fedora releases on my 1Mb/s ADSL line to try. I should get my brother to download them on his fibre link and post them to me. As a man once said: "Never underestimate the bandwidth of a truckload of CDs".

James

-

Well, I'm now at the point where I think I've done enough to kick it out there and see if it works for other people. I've built a 32-bit version and I'm just installing a few different OS releases to see if it works with them and how well.

I should probably concentrate on adding functionality rather than support for more cameras at this point, but I've been looking through all the QHY stuff I've collected. What a nightmare it is! So far I've found at least 25 different camera models, and I'm sure I'm not done yet. The open source QHY SDK supports about half of them, specifically excluding the QHY5 (but including the QHY5-II models). At least some of the cameras present one USB vid/pid pair when connected and then completely change it when their firmware is uploaded, but not all appear to have uploadable firmware. There's no obvious way I can see to tell which is the latest version of the firmware, either. All very jolly.

James

-

Don't some people measure their pupil in the dark? (I assume with a touch of red light so they can see the measurement!). I definitely don't have barn owl eyes

I asked the optician to measure mine last time I had my eyes tested. Ok, it was dim in the room rather than dark, but any loss of accuracy there is probably made up for by the fact that it's being done by someone else. He reckoned about 6.5mm for me.

James

-

I think being able to see the field stop easily is a comfort thing for some people. If the field of view is too large I think there's a temptation to allow one's eye to keep moving around it and then it's not so easy to just relax and look. I don't think that happens for everyone, but I'm sure it does for some.

James

-

Nearly there now. Have one little bug to deal with, a 32-bit build to do and I may well be ready to let people attempt to break it whilst I get on with implementing some of the stuff I've not done yet.

James

-

That is an unusual step for open source software...

Sadly so, yes

James

-

Well, I think we're nearly there.

I have fewer than half a dozen pretty trivial bugs to squash, a bit of documentation to write and a script to put together to put the files in the right places, and then I think I might be ready for a binary release. Could be done before the end of the week.

James

-

I guess the Windows interface may be in better shape than the Linux one. Looking at the output from the ASI120MM, I'm inclined to think that unless it's heavily cooled those extra few bits aren't worth a great deal over the 8-bit image. There's an enormous amount of noise there. Ok, so sitting on my desk running at 35C probably isn't the kindest of tests, but even so...
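To put "those extra few bits" in context: an 8-bit preview only needs the top 8 significant bits of each 16-bit sample, and the bits below that are what the noise is swamping. A minimal sketch, assuming the camera either left-aligns its data in the 16-bit word or right-aligns it with a known number of significant bits (which of these the ASI120MM does isn't established here; `to_8bit` is a hypothetical helper):

```python
def to_8bit(samples, significant_bits=16, left_aligned=True):
    """Keep only the top 8 significant bits of each 16-bit sample for display.

    If the camera left-aligns its data in the 16-bit word, the top byte is
    what we want. If it right-aligns N significant bits, shift by N - 8.
    """
    shift = 8 if left_aligned else significant_bits - 8
    # min() guards against stray values above the declared bit depth
    return bytes(min(s >> shift, 255) for s in samples)
```

For example, `to_8bit([4095, 2048], significant_bits=12, left_aligned=False)` maps right-aligned 12-bit full scale and half scale to 255 and 128.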

James

-

Thanks Chris. Reassuring to know that you've hit the same problem

Sounds like I should perhaps leave things as they are and worry about them another time. 16-bit mono performance seems dreadful with the ASI120MM. I suspect there may be much bit-twiddling going on in the interface library.

Your reference to a vertical flip reminds me that I've not done that for 16-bit mono yet. I'm half-tempted to implement it only for the preview image. It's not like it really matters which way up (or left-to-right) the saved image is.
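For a packed 16-bit mono frame, a vertical flip is just row reordering with a stride of width × 2 bytes. A left-to-right flip is where 8-bit code can't simply be reused: whole two-byte pixels have to be moved, otherwise the two bytes of each sample end up swapped. A sketch with hypothetical helper names:

```python
def flip_vertical(frame, width, height, bytes_per_pixel=2):
    """Return a copy of a packed frame with its rows in reverse order."""
    stride = width * bytes_per_pixel
    if len(frame) != stride * height:
        raise ValueError("frame size does not match width/height")
    rows = [frame[y * stride:(y + 1) * stride] for y in range(height)]
    return b"".join(reversed(rows))


def flip_horizontal(frame, width, height, bytes_per_pixel=2):
    """Reverse pixel order within each row, keeping each pixel's bytes intact."""
    stride = width * bytes_per_pixel
    out = bytearray()
    for y in range(height):
        row = frame[y * stride:(y + 1) * stride]
        # split the row into whole pixels before reversing
        pixels = [row[x:x + bytes_per_pixel] for x in range(0, stride, bytes_per_pixel)]
        out += b"".join(reversed(pixels))
    return bytes(out)
```

Both functions expect `frame` as `bytes`; applying them only to the preview copy leaves the saved data untouched.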

James

-

I think I'm just about there with the mono 16-bit support now. I still have to do the histogram and deal with saving the data. Actually, I have ffmpeg writing an AVI file which is claimed to be 16-bit greyscale raw video, but when I try to play it back the output says the frame size is 640 x -480

Clearly there's a bug somewhere, but at the moment I'm not sure where. I've checked everywhere I do anything that makes the frame size accessible and it always shows correctly.

Windows Media Player correctly identifies the frame size but decides it is a colour file, as does Registax. AS!2 doesn't like it at all.
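One thing that might be worth checking, offered as a guess rather than a diagnosis: the video format in an AVI `strf` chunk is a BITMAPINFOHEADER, and in that structure a negative `biHeight` is the conventional way of saying the rows are stored top-down rather than bottom-up. A player that prints the raw field would then report 640 x -480. Likewise, `biCompression = BI_RGB` (0) with `biBitCount = 16` is defined as 5-5-5 RGB, which could explain players treating the file as colour. The sketch below decodes the header; `find_strf` is a deliberately naive search for the first `strf` chunk, not a proper RIFF parser.

```python
import struct

# BITMAPINFOHEADER: 40 bytes, little-endian.
# biSize, biWidth, biHeight (signed), biPlanes, biBitCount, biCompression,
# biSizeImage, biXPelsPerMeter, biYPelsPerMeter, biClrUsed, biClrImportant
BITMAPINFOHEADER = struct.Struct("<IiiHHIIiiII")
BI_RGB = 0


def find_strf(avi):
    """Return the bytes after the first 'strf' chunk header, or None (naive search)."""
    idx = avi.find(b"strf")
    if idx < 0:
        return None
    start = idx + 8  # skip the FourCC and the 4-byte chunk size
    return avi[start:start + BITMAPINFOHEADER.size]


def describe_bmih(header):
    """Summarise the fields relevant to the odd frame size and colour guess."""
    (_, width, height, _, bit_count, compression,
     _, _, _, _, _) = BITMAPINFOHEADER.unpack_from(header)
    return {
        "width": width,
        "height": abs(height),
        "top_down": height < 0,  # negative biHeight: first stored row is the top row
        "bit_count": bit_count,
        "rgb555": compression == BI_RGB and bit_count == 16,
    }
```

Running `describe_bmih(find_strf(open(path, "rb").read()))` on the problem file would show whether the height field is negative and whether the header claims BI_RGB at 16 bits.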

So at the moment it's anyone's guess

James

-

Well, I thought it would be relatively straightforward, but adding support for 16-bit mono is getting quite hairy

I have a list of "things to do before making a binary alpha release". For some reason 16-bit mono support is on it. I don't know why I have it there rather than in the list of "things to do before making a source release" or "enhancements". When I write software for paying customers I often end up with a list of "things that must be done before I can claim requirement X is met", and whilst I might add to that list, I rarely remove things from it. It's a surprisingly hard mindset to get out of.

James

-

Thank you, Jake

I guess progress is a reflection of just how many cloudy nights there have been in the last few weeks, too

I've been quite surprised by how little performance is impacted by some of the image processing, I have to say. Where I've been doing BGR->RGB conversion, image flipping or histogram creation it's made very little impact on the frame rates possible. I agree that the data rate from the camera and storage of data on disk are going to be the real limiting factors. There's always the possibility of using ramfs on Linux I guess, though now that single capture runs can get into gigabytes of data you'd need a lot of RAM just to be able to do a single run.
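Two of those points in sketch form, with hypothetical helper names. The BGR->RGB swap on a packed 8-bit buffer is a single pass over memory; in Python it can be done with extended slice assignment rather than a per-pixel loop. The second function is just the arithmetic behind the ramfs comment; the frame size and rate used below are illustrative, not measurements.

```python
def bgr_to_rgb(frame):
    """Swap the first and third byte of every 3-byte pixel in a packed BGR frame."""
    if len(frame) % 3:
        raise ValueError("frame length is not a multiple of 3")
    out = bytearray(frame)
    out[0::3] = frame[2::3]  # red bytes into the first slot
    out[2::3] = frame[0::3]  # blue bytes into the third slot
    return bytes(out)


def run_size_bytes(width, height, bytes_per_pixel, fps, seconds):
    """Uncompressed size of a capture run, i.e. what ramfs would have to hold."""
    return width * height * bytes_per_pixel * fps * seconds
```

For example, one minute of 1280x960 16-bit mono at 30 fps is `run_size_bytes(1280, 960, 2, 30, 60)`, which is 4,423,680,000 bytes, or roughly 4.4 GB.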

There are so many different debayering algorithms now that it's hard to work out what's worth trying and what isn't. The simpler ones seem to do a good enough job for alignment and focusing, but the more complex ones, which often take multiple passes (up to half a dozen in some I've seen) over each colour, appear to produce far better results. If I've understood correctly there even appear to be some that attempt to account for the fact that the CFA doesn't give a hard cut-off in the frequency response, so "near green" reds and blues actually get a response in the green pixels and vice-versa. From memory I believe Nebulosity (could be one of the other similar packages though) offers a choice of something like ten different debayering methods! Obviously it's necessary to debayer for viewing on-screen at capture time and probably for focus scoring, auto-cropping and so on, but this is why I'm thinking it probably makes sense to store the raw data on disk and handle the final debayering in processing. Or at least have the option to do so.

On the other hand one might argue that if you're that worried about the quality of your debayered output you probably need to be getting a mono camera and filters. But on the third hand, sometimes being restricted to a colour camera is the best life offers and the lack of availability or affordability of a mono camera/filters/filter wheel etc. shouldn't be a barrier to making the best of the data that is available to you.

James

-

Whilst implementing a few other things this evening I've been pondering on raw (undebayered) colour images.

It looks like there are dozens of debayering algorithms, some of which are very good but computationally quite expensive. I'm thinking that it would make good sense where raw data is available from the camera to use a quick debayering algorithm for the preview to keep the frame rate up (bilinear, perhaps) and store the raw data on disk for later processing using any number of algorithms that aren't really feasible in "real time". I'm even given to wondering if there may not be some method for "scoring" the debayered output such that one file could be automagically processed using a number of different algorithms and those that score badly discarded without human intervention, but that's for another time...
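A bilinear debayer of the kind suggested for the preview fills each missing colour at a pixel with the average of the same-coloured sites in its 3x3 neighbourhood: four orthogonal greens at a red or blue site, four diagonal reds or blues at the opposite site, and two neighbours at a green site. The sketch below assumes an RGGB pattern, integer rounding and an image of at least 2x2; it is pure Python to show the algorithm, not something fast enough for real-time use.

```python
def bayer_colour(x, y, pattern="RGGB"):
    """Colour ('R', 'G' or 'B') of the CFA site at column x, row y."""
    return pattern[(y % 2) * 2 + (x % 2)]


def debayer_bilinear(raw, pattern="RGGB"):
    """Bilinear demosaic of a 2D list of raw values into rows of (r, g, b) tuples."""
    height, width = len(raw), len(raw[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            sums = {"R": 0, "G": 0, "B": 0}
            counts = {"R": 0, "G": 0, "B": 0}
            # gather every site in the (edge-clipped) 3x3 window by colour
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < height and 0 <= xx < width:
                        c = bayer_colour(xx, yy, pattern)
                        sums[c] += raw[yy][xx]
                        counts[c] += 1
            own = bayer_colour(x, y, pattern)
            pixel = []
            for c in "RGB":
                if c == own:
                    pixel.append(raw[y][x])  # the measured value is kept as-is
                else:
                    # any 2x2 block holds all three colours, so counts[c] > 0
                    pixel.append(sums[c] // counts[c])
            row.append(tuple(pixel))
        out.append(row)
    return out
```

Because the raw values pass through untouched, the same frame can still be written to disk for a slower, better algorithm later.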

Anyhow... Back to the plot.

I can't find any recognised pixel format definition for raw video frames, nor a codec that directly handles them. Is anyone else aware of one, or shall I just shove them in an uncompressed raw video stream and assume the user will sort the details out later?
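Failing a recognised container format, one option is to write the undebayered frames as a flat uncompressed stream and record how to interpret them alongside it. The JSON sidecar below is entirely hypothetical, not an existing standard; its point is that width, height, bit depth, byte order and CFA pattern are what a later tool needs in order to "sort the details out". (Later FFmpeg releases list Bayer pixel formats such as `bayer_rggb8` and `bayer_rggb16le`, which may be worth checking with `ffmpeg -pix_fmts` on whatever version is installed.)

```python
import json


def pack_raw_frames(frames, width, height, bit_depth, pattern):
    """Concatenate undebayered frames and build metadata describing them."""
    bytes_per_sample = 1 if bit_depth <= 8 else 2
    frame_size = width * height * bytes_per_sample
    chunks = []
    for frame in frames:
        if len(frame) != frame_size:
            raise ValueError(f"expected {frame_size} bytes per frame, got {len(frame)}")
        chunks.append(bytes(frame))
    meta = {
        "width": width,
        "height": height,
        "bit_depth": bit_depth,
        "bytes_per_sample": bytes_per_sample,
        "byte_order": "little",  # assumption: samples are stored little-endian
        "cfa_pattern": pattern,  # e.g. "RGGB"; tells a later tool how to debayer
        "frame_count": len(chunks),
    }
    return b"".join(chunks), meta


def save_raw_capture(path, frames, width, height, bit_depth, pattern):
    """Write the raw stream to `path` and the hypothetical sidecar to `path + '.json'`."""
    data, meta = pack_raw_frames(frames, width, height, bit_depth, pattern)
    with open(path, "wb") as f:
        f.write(data)
    with open(path + ".json", "w") as f:
        json.dump(meta, f, indent=2)
    return meta
```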

James

-

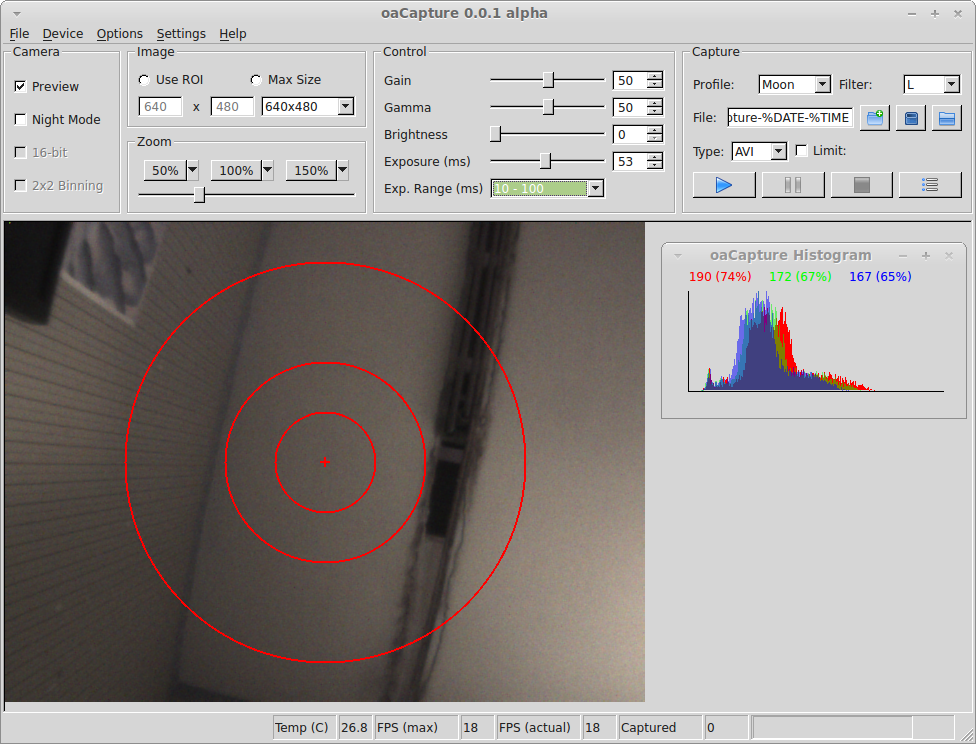

RGB histogram and reticle done. I think I might have to tone the green down in the histogram. It's a bit, err, vibrant...

Later I'll add some options for a set of tram-lines or something similar.
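A reticle with optional tram-lines amounts to drawing a centre cross plus pairs of parallel lines either side of it into the preview buffer. A minimal sketch for a packed 8-bit greyscale preview, drawn in place; the helper names and the `tram_gap` parameter are hypothetical, and an RGB preview would need the same writes repeated per channel.

```python
def _hline(frame, width, y, value):
    """Set every pixel of row y."""
    frame[y * width:(y + 1) * width] = bytes([value]) * width


def _vline(frame, width, height, x, value):
    """Set every pixel of column x."""
    for y in range(height):
        frame[y * width + x] = value


def draw_reticle(frame, width, height, value=255, tram_gap=0):
    """Draw a centre cross and, if tram_gap > 0, tram-lines tram_gap pixels either side."""
    cx, cy = width // 2, height // 2
    _hline(frame, width, cy, value)
    _vline(frame, width, height, cx, value)
    if tram_gap:
        for d in (-tram_gap, tram_gap):
            if 0 <= cy + d < height:
                _hline(frame, width, cy + d, value)
            if 0 <= cx + d < width:
                _vline(frame, width, height, cx + d, value)
    return frame
```

`frame` must be a mutable `bytearray`, since the overlay is written directly into the preview copy.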

James

-

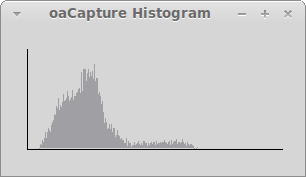

Well, I'm getting there with the histogram:

Now I want to add some text to display the maximum pixel value and percentage of maximum, and then to do the same for RGB images (this one is from a greyscale capture) to display individual red, green and blue histograms on the same graph.
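The histogram text and the per-channel version can be sketched as follows, reading "percentage of maximum" as the peak pixel value relative to full scale for the bit depth (an assumption about the intended figure). `histogram`, `peak_stats` and `rgb_histograms` are hypothetical names; the RGB version assumes a packed 8-bit RGB frame.

```python
def histogram(samples, bit_depth=8):
    """Count how many samples fall at each possible value."""
    bins = [0] * (1 << bit_depth)
    for s in samples:
        bins[s] += 1
    return bins


def peak_stats(samples, bit_depth=8):
    """Return the maximum pixel value and its percentage of full scale."""
    full_scale = (1 << bit_depth) - 1
    peak = max(samples)
    return peak, 100.0 * peak / full_scale


def rgb_histograms(frame):
    """Separate red, green and blue histograms for a packed 8-bit RGB frame."""
    return {name: histogram(frame[i::3]) for i, name in enumerate("RGB")}
```

Plotting the three lists from `rgb_histograms` on the same axes gives the combined colour graph, and `peak_stats` applied to each channel slice gives per-channel figures for the text overlay.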

James

-

Capture software for Linux

in Discussions - Software

No wonder I have bugs in my software with that sort of woolly writing. I do of course mean "(I've been thinking about another alpha release) this evening", not "I've been thinking about (another alpha release this evening)"

James