Posts posted by vlaiv

-

-

14 minutes ago, Anthonyexmouth said:

I'll check that as soon as I get home.

Did you see the large motes in the flat? What could I be doing wrong that would make them still show in the stacked image?

I carried out the new set of calibration at night to make sure there was no light leak, put the 2 covers over the pier too in case neighbours turned any lights on.

Under or over correction happens when your flats or your lights contain some signal other than light (dark not removed properly, light leak, or some manipulation of the dark - like dark optimization).

Under correction is when dust shadows are still seen as being darker. Over correction is when dust shadows and vignetting becomes brighter than it should be.

Under correction happens when "flat is stronger" or "light is weaker".

It is very hard to get weaker light, but it can happen. For example - darks taken when camera was hotter, will create darks with higher value (more dark current buildup due to higher temperature) and you subtract those higher darks from lights - you end up with lower value lights (subtract more than you should - you end up with less).

The other thing that can happen is stronger flats (higher values in flats than there should be). This happens, for example, if one uses bias instead of flat darks: bias does not contain the dark current signal that is present in flat darks, so bias is lower in value. You subtract it from flats, so it takes away less and leaves a higher value.

Dark optimization will do that if you don't use bias and have stable bias files (mostly with CCDs and DSLRs - it looks like CMOS astronomy cameras have issues with bias). Dark optimization multiplies the dark to get it to the proper value - but if you don't remove bias from darks, you'll multiply bias as well. That is bad, since bias depends on neither temperature nor exposure length, yet the scaling assumes it does and tries to compensate for one of those two. This again makes more or less of the bias signal and messes up flat calibration as a consequence.
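To put numbers on the two under correction cases above, here is a minimal sketch with made-up values (a 6 pixel "sensor", a dust shadow passing 80% of the light, and arbitrary bias / dark current levels). None of these numbers come from real data, they are only there to show the direction of the error.

```python
import numpy as np

# Hypothetical 1-D "sensor": uniform sky of 1000 e with a dust shadow
# that passes only 80% of the light on the two middle pixels.
transmission = np.array([1.0, 1.0, 0.8, 0.8, 1.0, 1.0])
sky = 1000.0

bias = 100.0         # offset present in every frame (assumed value)
dark_light = 50.0    # dark current built up during a light exposure
dark_flat = 20.0     # dark current built up during a flat exposure
flat_level = 2000.0  # brightness of the flat panel

light = sky * transmission + dark_light + bias
flat = flat_level * transmission + dark_flat + bias

master_dark = dark_light + bias      # dark matching the lights
master_flat_dark = dark_flat + bias  # flat dark matching the flats


def calibrate(light, dark, flat, flat_dark):
    """(light - dark) divided by the normalised (flat - flat_dark)."""
    f = flat - flat_dark
    return (light - dark) / (f / f.max())


# Proper calibration: the shadow disappears, every pixel reads 1000.
correct = calibrate(light, master_dark, flat, master_flat_dark)

# Bias used instead of flat darks -> "flat is stronger".
bias_as_flat_dark = calibrate(light, master_dark, flat, bias)

# Darks taken 30 e "hotter" than the lights -> "light is weaker".
hot_dark = calibrate(light, master_dark + 30.0, flat, master_flat_dark)

print(correct)
print(bias_as_flat_dark)
print(hot_dark)
```

In both faulty cases the shadow pixels end up a bit lower than their neighbours, which is the under correction described above.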

-

Just now, Anthonyexmouth said:

I was hoping I'd avoided the apt problem by not using the flats aid and just eye balling it and shooting manually. The stack is bigger because I drizzled in DSS to see if the same thing happened. Are you saying I should maybe use a different capture software to get the calibration frames?

If you are already using manual exposure for both flats and flat darks, with the exact same exposure length and settings for each, and your darks are good (shot at exact same exposure/settings as your lights) - you should be ok calibration wise.

What is left to do is check if you have any light leaks, and make sure you turn off dark optimization in DSS.

Don't use drizzle - there is no point.

If you are using latest version of DSS, make sure that:

a) Dark optimization is turned off (it is turned on by default I believe in latest version)

b) Dark multiplication factor is not applied

Both settings are under Dark

-

3 minutes ago, PeterCPC said:

Let's not forget Sharpcap for capture.

Peter

I left it out on purpose. It is very good software for planetary and live stacking work, but not so good for DSO imaging, in my opinion.

It is not the fault of software, but rather consequence of the fact it is primarily aimed at planetary. It uses native drivers first - you need to specifically select ASCOM driver to do capture with.

Native drivers operate a bit differently - they are aimed at fast readout and because of that - subs are not as stable as with ASCOM drivers (or so I've found). This means that calibration is more likely to fail when using native drivers vs ASCOM drivers that are specifically aimed at long exposure.

-

3 minutes ago, William Productions said:

Are there any solar filters I can use to see sunspots?

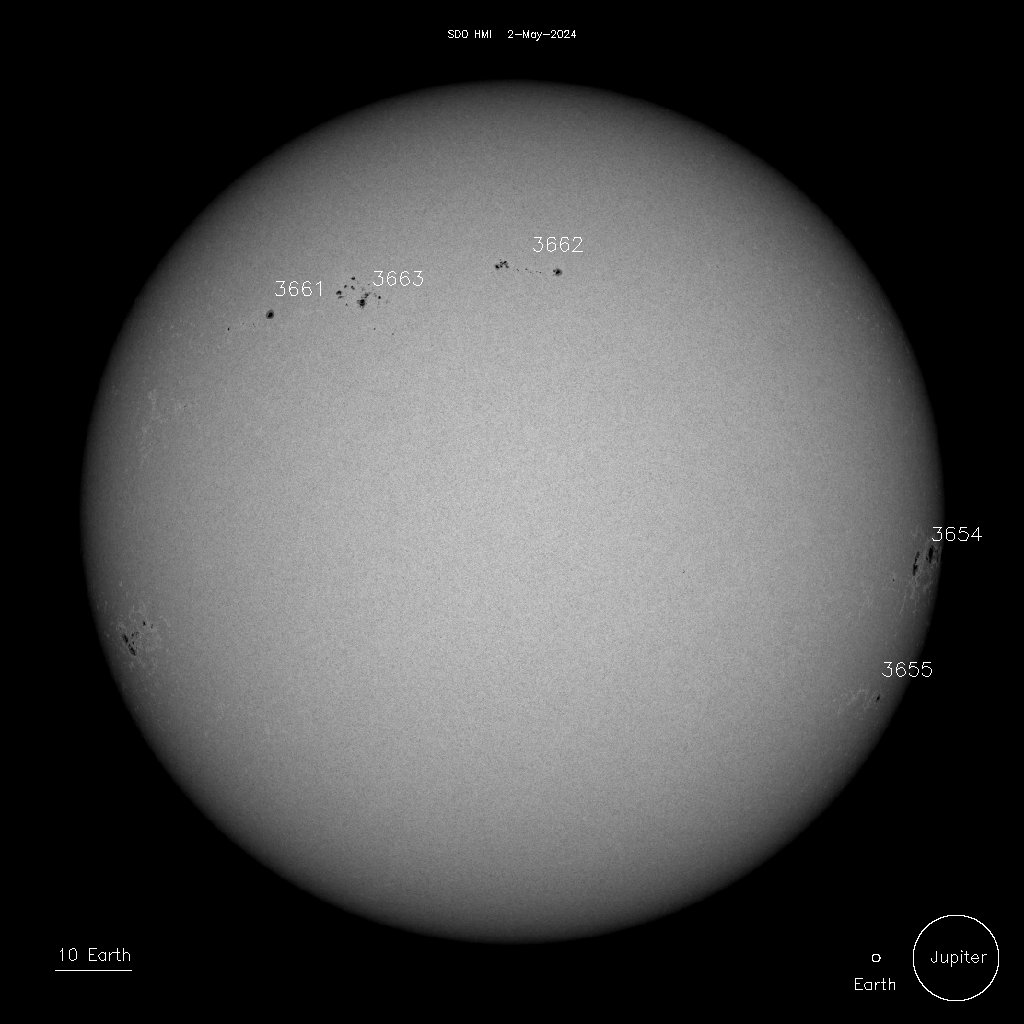

You can use Baader solar foil to make full aperture white light filter, but at the moment, Sun is at solar minimum (or just finished it) and there are no sun spots to observe. You will need to wait a bit for solar activity to pick up and first sun spots to start appearing before you can look at them.

this image shows current view in white light:

-


You are doing solar work. You have very bright target regardless of any filter components that might dim it a bit.

Your primary concern should be - exposure length, because solar work is again lucky planetary type imaging. With planetary / lucky type imaging you want to freeze the seeing. Exposure length depends on something called coherence length and coherence time.

For most part - that means exposure lengths of about 5-6ms when doing planetary. Only in very best seeing you can go with 20-30ms exposures, but that is rather rare. Planetary imagers struggle to get enough signal in such short exposure, but you should have no issues. You already have low enough exposure length with 2ms to beat the seeing.

Next important thing with planetary type imaging is read noise. If read noise is zero - there is no difference in single exposure of one minute or thousands of short exposures all totaling to one minute (difference in SNR) - as all other components are time dependent and add up the same. Only read noise is per sub - and more subs you have more times you add read noise.

For this reason, best planetary cameras have fast readout and low read noise.

Gain setting changes both full well depth and read noise. Unless you are saturating in your exposure - you should not worry about full well depth / dynamic range in planetary imaging. In fact, you don't have to worry about it - period. If you saturate - just reduce exposure length.

This is very important graph for you - it shows that read noise is the lowest at about Gain 300. Dynamic range is still high enough at 8bits (for planetary imaging as you will be stacking hundreds or thousands of subs).

In the end, let me add that you should do proper calibration of your recordings. This means darks, flats and flat darks. Go crazy with number of subs there - hundreds of them (provided that you have strong enough flat panel and your flats are short - even one second flat will take only 15 or so minutes to record both flat sequence and flat dark sequence in hundreds of subs).

Use PIPP to calibrate your ser with all of those (it supports loading of ser files to do calibration) and export calibrated ser without much processing (and then continue to AS!3).

-


You need planetarium app and telescope control - Stellarium

You need capture application - if you have dedicated astro camera here are some choices: SGP Lite, Nina, APT, SIPS

I switched to SGP Lite after using SIPS for some time and eventually went for SGPro (paid version). For DSLR I think most people opt for APT, but there is ASCOM driver for Canon cameras, so in principle you could use any above application with Canon DSLR as well (not sure about other DSLRs like Sony and Nikon and such - if they have ASCOM drivers available).

You will need guiding app - PHD2

You will need stacking/calibrating application - DSS and ImageJ (with some plugins). First is only sensible option, second is a steep learning curve. I believe there are other options as well (like Siril?) but I have not used them, so can't give direct recommendation.

In the end you will need processing software - Gimp (use 2.10 and above as it has support for 32bit per channel format).

-

I'm failing to download Autosave.tif for some reason, but I wonder

How come that your single flat sub is 22MB while stacked version is 400MB - that is x20 increase in size. Maybe you made mosaic or something?

In any case - from fits header on flat, I see that you are using APT - and it seems that people have issues with APT and automatic calibration frames. I'm not sure why is that, I personally don't use APT so can't tell what might go wrong.

Don't use any automated process or wizard - shoot all your subs on manual settings. Take dark, flats and flat darks (plenty of each) and of course pay attention that darks are matching lights in all settings and that flat darks are matching flats in all settings.

-

1 hour ago, Paul2019 said:

Wow, thanks for the in depth reply. So my understanding is that the noise is going to limit my image and there will become a point where noise is too great? So does that mean with each additional light the noise will increase? or have I got my wires crossed somewhere? Its all so confusing

Here is very bare explanation of all key points:

Noise is random unwanted signal.

Next to that there is unwanted non random signal.

Finally there is signal that we want - target signal.

There are 4 main types of noise (random components) in AP and associated signal (either wanted or unwanted):

- read noise - it is Gaussian type noise. You have it each time you read out a sub and it is always same intensity/magnitude (that is why we say for example that read noise is 3.5e for particular camera). There is also "read signal" - or more often called bias signal. This is signal, it is unwanted signal (but not random) and it is removed in calibration. You can inspect that signal by taking set of bias subs and creating master bias

- thermal noise - it is Poisson type of noise and it is related to dark current signal. Their relationship is: intensity of thermal noise is equal to square root of intensity of dark current signal. Dark signal builds up linearly with time (for given temperature, if we change temperature things get messy - this is why it is important to shoot darks at same temperature as lights). Dark signal is removed in calibration.

- light pollution noise - it is Poisson type of noise and it is related to sky signal. Again their relationship is: LP noise is square root of intensity of Sky signal (same as with dark current and thermal noise). Sky signal increases linearly with time (same as dark current signal). Sky background is removed in processing (either setting black point or using advanced things like background removal if one has gradients in their images).

- Target noise - it is again Poisson type noise and it is related to target signal. Again - target noise is square root of target signal (like previous two), Again target signal increases linearly with time (as previous two).

Target signal is good signal and we don't want to remove it but rather capture it.

Finally - noise adds in "quadrature" - or linearly independent vectors - or square root of sum of squares.

One more thing - "stacking" (adding or averaging) of noise will produce value that is square root of number of subs larger or smaller (depending on whether we are adding or averaging) than individual noise level (this can be shown by above rule on noise addition)

If you put all of this together:

All signal except bias signal (not important as it is removed in calibration) rises linearly with time - so does our target signal.

All noise except read noise is square root of one of above signals (thermal, sky or target) - so it rises like square root (less than linear).

Read noise is per sub - but when added it rises like square root of number of subs (rule about stacking) - while signal adds linearly.

Overall - signal will always "beat" noise given enough time - regardless how small signal is and how large noise is - because signal rises linearly and noise rises like square root.

This is graph of square root function and I've added linear function (straight line).

At the beginning - root can be larger than linear value but there is a point where linear shoots off and beats square root and can be arbitrarily larger. This means that we can have any SNR we want - if we put in enough exposure time (number of subs) for any given signal strength and noise levels.
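The rules above (signal adds linearly, Poisson noise is the square root of its signal, read noise is per sub, everything adds in quadrature) fit into a few lines. This is only a sketch: the function name and all the rates below are made up for illustration, not measured from any camera.

```python
import math


def stack_snr(n_subs, sub_s, target_rate, sky_rate, dark_rate, read_noise):
    """SNR of a stack of n_subs exposures, each sub_s seconds long.

    Rates are in electrons per second per pixel, read_noise in electrons.
    """
    total_s = n_subs * sub_s
    signal = target_rate * total_s
    # Poisson noise: variance equals the accumulated signal.
    variance = (target_rate + sky_rate + dark_rate) * total_s
    # Read noise: one dose per sub, added in quadrature.
    variance += n_subs * read_noise ** 2
    return signal / math.sqrt(variance)


# Faint target under a much brighter sky, 60 s subs (hypothetical numbers).
snr_table = {
    n: stack_snr(n, 60, target_rate=0.05, sky_rate=2.0,
                 dark_rate=0.1, read_noise=3.5)
    for n in (1, 4, 16, 64, 256)
}

for n, value in snr_table.items():
    print(f"{n:4d} subs -> SNR {value:.2f}")
```

With fixed sub length, every x4 in number of subs doubles the SNR, so even a target that starts well below SNR 1 in a single sub climbs above it with enough subs.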

-


29 minutes ago, Paul2019 said:

Obviously I am aware that longer subs are better than shorter ones and that a larger number of shorter ones won't provide the same image as the same amount of exposure time in larger subs.

Do you know why is that? It is solely down to read noise. If read noise was 0 - then there would be no difference. 100 subs each 1s long would give exactly the same image as 1 sub 100 seconds long (as long as total imaging time is the same).

In fact, there is very small difference between two approaches when read noise is non zero if read noise is not dominant noise source. If you have heat noise (dark current noise) or light pollution noise that swamps read noise - you'll be hard pressed to see the difference between the two.

All other noise sources depend on time, and only read noise is per exposure - for this reason there is point of diminishing returns (which depends on both read noise and other noise sources) - after which there simply are no gains in going longer exposure. If your read noise is low and you shoot in light pollution on a hot summer's night (and your sensor on camera gets hotter than usual) - there is a chance that you won't gain anything by going longer than those 8 seconds.

On the other hand, if you use cooled camera, narrow band filters and you are shooting in dark location, high resolution image - then it's worth making single sub be half an hour or more.
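Here is a rough sketch of that point of diminishing returns: same total time (one hour), split either into 8 s subs or into half hour subs. All rates are hypothetical and only chosen to represent "bright and hot" versus "dark, cooled and narrowband".

```python
import math


def snr(total_s, sub_s, target_rate, background_rate, read_noise):
    """SNR for total_s seconds of integration split into sub_s second subs."""
    n_subs = total_s / sub_s
    signal = target_rate * total_s
    variance = (target_rate + background_rate) * total_s
    variance += n_subs * read_noise ** 2  # read noise is paid once per sub
    return signal / math.sqrt(variance)


def gain_from_longer_subs(target_rate, background_rate, read_noise,
                          short_s=8, long_s=1800, total_s=3600):
    """How many times better the SNR gets when going from short to long subs."""
    long_snr = snr(total_s, long_s, target_rate, background_rate, read_noise)
    short_snr = snr(total_s, short_s, target_rate, background_rate, read_noise)
    return long_snr / short_snr


# Light pollution + warm sensor: background swamps read noise.
hot_polluted = gain_from_longer_subs(0.5, 20.0, 3.5)
# Cooled camera, narrowband filter, dark site: read noise dominates.
cooled_narrowband = gain_from_longer_subs(0.05, 0.02, 3.5)
# Zero read noise: sub length makes no difference at all.
no_read_noise = gain_from_longer_subs(0.05, 0.02, 0.0)

print(f"hot / polluted:      x{hot_polluted:.2f}")
print(f"cooled / narrowband: x{cooled_narrowband:.2f}")
print(f"no read noise:       x{no_read_noise:.2f}")
```

In the first case going from 8 s to half an hour buys only a few percent, in the second it is a several fold improvement.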

31 minutes ago, Paul2019 said:

But is there a limit to the number of subs I can stack? I.e will 200 subs be too many? How about 2000? Where will I hit the limit of what is practical?

In principle there is no limit to number of frames you can stack. In practice, yes there is limit in terms of memory / size of each sub, and numeric precision of data format used. With 32bit floating point format and modern day cameras you'll run out of precision after stacking about a couple of thousand subs. 32bit integer format is better in this respect as you would be able to stack millions of subs before you run out of precision, but 32bit integer lacks dynamic range of 32bit float precision format.

In any case, as long as you stay in hundreds of subs (or maybe one to two thousand) and use 32bit precision (which ever version) - you will be fine.
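One way to see where those numbers come from (this is my simplification, assuming subs are simply summed and each sub holds full scale ADU values): 32bit float has 24 bits of significand, so once the running sum needs more than 24 bits, the lowest bits start getting rounded away.

```python
import numpy as np

# 23 stored bits + 1 implicit bit = 24 bits of significand in float32.
FLOAT32_SIGNIFICAND_BITS = np.finfo(np.float32).nmant + 1
INT32_BITS = 32  # unsigned 32bit integer assumed


def exact_sum_limit(container_bits, camera_bits):
    """Number of full scale subs that can be summed without losing low bits."""
    return 2 ** (container_bits - camera_bits)


for camera_bits in (12, 14, 16):
    as_float = exact_sum_limit(FLOAT32_SIGNIFICAND_BITS, camera_bits)
    as_int = exact_sum_limit(INT32_BITS, camera_bits)
    print(f"{camera_bits}bit camera: float32 ~{as_float} subs, int32 ~{as_int} subs")

# The rounding itself: past 2**24, adding 1 to a float32 changes nothing.
big = np.float32(2 ** 24)
lost = (big + np.float32(1)) == big
print("adding 1 is lost:", bool(lost))
```

Real stacking software averages rather than sums and real subs are rarely at full scale, so treat these as order of magnitude figures only.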

Another important point to add to this is - you can use arbitrarily short subs and stack them and you will get result, but problem with very short subs is that you won't have alignment stars visible in your subs (regardless of that - if you had a means of aligning the subs - they would still produce image even if any single sub has no photons captured in particular pixel).

40 minutes ago, Paul2019 said:

I am planning on collecting some data around Orion and although the nebula is visible in 8secs, I want to try and get a bit more from it.

I am awaiting this whole mess to be over so I can put an order in for a HEQ5 but for now it will have to do. But here's 3 minutes unprocessed from the other night,

Very important aspect of astrophotography is processing. Try processing that image of yours and you will be surprised how much difference 3 minutes makes compared to 8 seconds.

Even just a slight tweak on that 8 bit version you posted changes image quite a bit:

-

Yep, know what you mean:

and full moon on top of it ...

-


9 hours ago, Tjk01 said:

So I gave that a try and while it did stack the pictures the result was not very likeable I'll try to share my data files. There's over a hundred light files though the 7 were just a test.

Do share data as it could contain much more than meets the eye. Processing of stacked images is skill in itself.

Please make sure to export as 32bit (fits or tiff) as 16bit format can lead to some data loss if you stacked many subs.

-

Just now, fairyhippopo said:

my main purpose is planetary, so i guess fov is not an issue for me..

Then get a driven mount. Even simple clock drive on a basic mount is going to be beneficial.

I mostly observe with 8" dob, but got AzGti with ST102 (primary purpose of purchase was something else, but with strong desire to keep the scope close to me for quick grab n go from balcony). It really makes a difference not having to nudge scope every so often - focus shifts solely on teasing out the details and not messing with mount and getting view back in center of EP every dozen seconds or so.

-

+1 for Skymax 102.

It's recent addition so I have not had a chance to use it much, but so far - I like it very much.

When I was thinking of getting my first Maksutov scope - there were a few contenders 90mm, 102mm and 127mm. I'm glad I've chosen 102mm.

In essence very similar focal length to 90mm (1250mm vs 1300mm) but a bit more aperture - good for both light grasp and for resolving things. Moon is very sharp with this scope. I was not much of Lunar observer but this scope is now my grab&go for quick lunar.

Many people will say that Maks have very narrow field of view, and while this might be true for larger instruments with longer focal length, I don't think it is necessarily true for 90/102mm models. I also have 8" dob. That instrument has 1200mm of focal length and is considered one of the best amateur visual instruments and often recommended to beginners. Almost the same focal length as 90/102mm maks (only 50mm difference between each of them). I never felt "boxed in" by my 8" dob, and yes, if you want to observe whole Andromeda with it - it's not really going to work, even if you put forward serious cash (and get ultra wide 2" eyepiece) - you'll still miss a bit of FOV.

But if person wants wide field views - it's just much more cost effective to get ST102 / ST120 type scope and enjoy truly wide fields at 500-600mm of FL.

In any case - good scopes, if you can, get 102 - just a bit larger and a bit more fl but more aperture for a bit more money.

-


Hi and welcome to SGL.

DSS should stack your image.

It is over sampled and stars are fairly large when you look at them at 100% zoom level. It might be what is causing DSS to struggle.

One of the simplest ways to deal with that would be to tell DSS to use super pixel mode. Maybe try that first?

Alternatively you could upload your subs (if there is only 7 of them) to some file sharing service and people here can have a go at stacking and then tell you what settings worked for sure.

-

4 minutes ago, dodgerroger said:

Yeah that does make sense, good job I have pixinsight then. Thanks for the info. So if I can summarise in my layman's terms shoot 1x1 in APT and then follow your earlier explanation in pixinsight to get 2x2👍. All good fun eh thanks again.

Indeed. It costs you nothing to try. If you select bin 2x2 in APT - it is irreversible action - you can't split binned subs to unbinned. However, you can shoot at 1x1 and then if you choose to do so - bin to x2 - it won't be any different compared to capturing it originally as binned x2.

Only difference when binning in software is that you have choice and you can compare what you prefer - make stack with original 1x1 or bin each sub after calibration and stack that and process both. Some people don't like to bin and find it easier to process over sampled image because they never look at image in full zoom. Having larger pixel count lets them apply more sharpening and image looks better / sharper on screen size (but it looks much worse with fat stars when you zoom in to 1:1 to see the details).

-

53 minutes ago, dodgerroger said:

I am using pixinsight at the minute, something else to get my head around. I thought the 183 was a good match for a short focal length scope

It is, but there are short focal length scopes and short focal length scopes.

It matches well with focal lengths of 320-350mm. Those are 70-80mm scopes in F/4-F/5 flavors. As soon as you get over 400mm you are starting to sample at close to 1"/px. That is about as good as amateur setups are able to do with high end mounts and larger scopes (8"+).

~ 80mm -> sampling rate of about 2"/px

~ 120-150mm -> sampling rate of about 1.5"/px

~ 8"+ -> 1 - 1.2"/px

That would be a sort of rule of thumb for sampling rates. You also need a mount whose guide RMS is half your sampling rate or less. So for 2"/px you need something like 1" RMS mount (Heq5 does that fairly easily). 1.5"/px - you need 0.75" RMS or less - EQ6/HEQ5/CEM60 all do it if properly tuned and with some experience with guiding. 1-1.2"/px - you need 0.5" RMS or less. We now hit premium mount territory here.

All is not lost, with the scope you have and that sensor, you can easily bin x2 to get 2.4"/px for very good sampling rate.

In fact - you can check ideal sampling rate for your image - take any stacked image (or a single sub) and measure average FWHM in that image - convert value to arc seconds (if in pixels, multiply with 1.18 to get arc seconds) and then divide value with 1.6 - that is optimal sampling rate for that particular image.

Let's say you have 3.2" FWHM stars - optimal sampling rate will be 3.2" / 1.6 = 2"/px
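The same arithmetic as a small sketch. The FWHM / 1.6 rule and the 1.18"/px figure are from this thread. The 2.4um pixel size (IMX183 sensor) and the 420mm focal length are my assumptions, picked only because they reproduce roughly 1.18"/px, so swap in your own values.

```python
def pixel_scale(pixel_um, focal_mm):
    """Sampling rate in arc seconds per pixel."""
    return 206.265 * pixel_um / focal_mm


def optimal_sampling(fwhm_arcsec):
    """Optimal sampling rate for a measured star FWHM in arc seconds."""
    return fwhm_arcsec / 1.6


def suggested_bin(fwhm_px, scale):
    """Whole number bin factor that gets closest to the optimal rate."""
    fwhm_arcsec = fwhm_px * scale  # convert FWHM from pixels to arc seconds
    return max(1, round(optimal_sampling(fwhm_arcsec) / scale))


scale = pixel_scale(pixel_um=2.4, focal_mm=420)  # assumed setup
print(f"native sampling: {scale:.2f} arcsec/px")
print(f"optimal for 3.2 arcsec FWHM: {optimal_sampling(3.2):.1f} arcsec/px")
print(f"suggested bin: x{suggested_bin(3.2 / 1.18, 1.18)}")
```

Measure FWHM on the actual stack (or a representative sub) and decide per session, since a night of good seeing can shift the answer from bin x2 to unbinned.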

One of advantages of software binning is the fact that you can choose to bin if it makes sense - by examining your image after you capture it. If night was particularly good and seeing stable and your mount well behaved - small FWHM - leave image unbinned if it makes sense - otherwise bin it.

-

46 minutes ago, dodgerroger said:

So out of interest, if I wanted to bin colour it wouldn’t be in APT but at what point would it be done? I’ll keep it at 1x1 for now while I get my head around it all

1.18"/px is on the edge to be over sampling. You need really good seeing / guiding / larger aperture to fully exploit high sampling rate.

What software are you using? If you are using PixInsight for example - calibrate your subs normally, and then prior to stacking - use Integer resample tool with average method (that will bin). Reduce subs that you want by x2 (for example color, although you might do both luminance and color).

Afterwards - proceed normally as if you captured particular set of subs with hardware binning.
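If you want to see what average binning does to the data, here is a minimal numpy sketch. It is not PixInsight's implementation, just the same idea: every 2x2 block of pixels is replaced by its average. The function name `bin_average` is made up for this example.

```python
import numpy as np


def bin_average(image, factor=2):
    """Software bin a 2-D image by averaging factor x factor pixel blocks."""
    h, w = image.shape
    # Drop leftover rows / columns that do not fill a whole block.
    h -= h % factor
    w -= w % factor
    trimmed = image[:h, :w].astype(np.float32)
    blocks = trimmed.reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


sub = np.arange(16).reshape(4, 4)  # stand-in for a calibrated sub
binned = bin_average(sub, 2)
print(binned)
```

Apply it to calibrated subs before registration and stacking, same as with the Integer resample step described above.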

-

What sort of dither movement does application offer? How many subs are you going to capture?

You started off in the right direction - first thing to do is to figure out what is your imaging resolution - or how big pixel is in terms of arc seconds.

Next thing is to figure out movement pattern and size of movement in order not to get to "same place" twice (or at least to reduce probability of that significantly). Third thing of course is that you don't want to crop off too much of your image due to excessive dither movement - remember each time you move a little - you cause edges of that sub to go "out of stack" (or rather intersection between subs shrinks).

Issue with SAM is that it guides in one axis only - RA. This means that any dithers you make will be along one axis which greatly reduces "maneuvering space".

I think that best strategy would be to spend some time to learn how much polar alignment error can you make deliberately before you get trailing in your subs due to poor polar alignment. Here is a good tool to help you with that:

http://celestialwonders.com/tools/driftRateCalc.html

Enter error in polar alignment and observe drift rate. For example - you are imaging at ~18"/px. If your drift rate is 0.1"/second - you can image for 3 minutes before drift makes trail one pixel long.

Why do I mention this? Because if you make polar alignment error such that it trails almost a pixel in single exposure - every exposure will move by one pixel and you will get "dither" in DEC as well. This is sort of natural dither. This makes it almost impossible to get to subs at same location even if RA moves back and forward the same amount (move Ra +5 px, move Dec +1px, move Ra - 5px, move Dec +1px - Ra is at exactly the same place it started but Dec also moved so sub in total moved, but if there was no movement in DEC - subs would align perfectly as +5 -5 = 0).
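The +5 / -5 example can be traced in a few lines. This is only an illustration with whole pixel moves: the back and forth RA pattern and one pixel of DEC drift per sub are the numbers from the example above, not something the mount guarantees.

```python
def positions(ra_steps, dec_step):
    """Frame offsets (ra_px, dec_px) after each dither move."""
    ra = dec = 0
    visited = [(ra, dec)]
    for step in ra_steps:
        ra += step        # commanded RA dither
        dec += dec_step   # "natural" DEC drift from polar alignment error
        visited.append((ra, dec))
    return visited


ra_pattern = [+5, -5, +5, -5]
no_drift = positions(ra_pattern, dec_step=0)
with_drift = positions(ra_pattern, dec_step=1)

print("unique positions without DEC drift:", len(set(no_drift)))
print("unique positions with DEC drift:   ", len(set(with_drift)))
```

Without drift the five subs land on only two distinct positions, with one pixel of drift per sub every one of them lands somewhere new.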

Btw - you are right - set RA dither around 10-15px (calculated in arc minutes if app requires it like that). I'm guessing here that dither strategy is random and not pattern.

-


16 minutes ago, dodgerroger said:

Hi there folks, I have just taken a big leap from dslr to mono cmos, a hyper cam 183.

I had the filter and camera all set up on my desk, so I thought I'd try a quick image plan in APT just to see how it all works. Anyway I set my RGB filters to 2x2 bin as people seem to use that for colour and 1x1 for luminance. Set it all going and APT came up with "2x2 binning not supported changing to 1x1".

Am I missing something??

Just shoot at 1x1 setting in your capture application.

Cmos sensors bin in software, and if you need to bin depending on your sampling rate - I can tell you how to do it.

9 minutes ago, ollypenrice said:

I gather the advantage from binning is not what it is with CCD

That sort of depends. Only difference between CCD hardware and any sensor software binning is read noise. You keep single read noise with CCD while you get double read noise for software bin (2x2 bin - x2 read noise, 3x3 bin - x3 read noise, .... etc).
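The x2 and x3 figures follow from adding noise in quadrature: summing 4 pixels gives sqrt(4) = 2 times the read noise, summing 9 gives sqrt(9) = 3 times. A quick simulated check, with a made-up read noise of 1.7e and a pure read noise frame:

```python
import numpy as np

rng = np.random.default_rng(0)
read_noise = 1.7  # electrons, hypothetical value
# Frame containing nothing but Gaussian read noise (size divisible by 2 and 3).
frame = rng.normal(0.0, read_noise, size=(1200, 1200))


def bin_sum(image, factor):
    """Software bin by summing factor x factor pixel blocks."""
    h, w = image.shape
    blocks = image.reshape(h // factor, factor, w // factor, factor)
    return blocks.sum(axis=(1, 3))


ratio_2x2 = bin_sum(frame, 2).std() / read_noise
ratio_3x3 = bin_sum(frame, 3).std() / read_noise
print(f"2x2 software bin: x{ratio_2x2:.2f} read noise")
print(f"3x3 software bin: x{ratio_3x3:.2f} read noise")
```

Signal in the summed pixel goes up x4 and x9 respectively, so binning still improves SNR. The read noise penalty only matters relative to CCD hardware binning, where read noise is applied once per binned pixel.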

In fact, depending on how you do software binning - it has other advantages, but let's not get into that now.

The only thing read noise is relevant to is exposure length. Only under very dark skies without LP, and with a high read noise camera, are you unable to "neutralize" read noise by choosing suitable exposure length (as in such conditions you would need hour long exposures to "neutralize" it).

Plan on binning in software? Increase sub duration slightly (so that "diluted" sky noise still beats read noise on single pixel prior to binning and you are fine).

-

3 minutes ago, MarkAR said:

Good idea.

Stellarium taken at 12.22+22secs.

All looks a bit different.

Not sure if you did it right.

Turn on EQ grid and center that on the screen and look where Polaris is with respect to "12 o'clock" - like this:

That is position for my location and current time. I was in alt az mode (not eq).

Be also aware that application might show Polaris position as seen thru pole finder scope - it is small refractor in see thru configuration - it will flip both vertical and horizontal axis - so above image in pole finder will be with polaris at about 5:45pm (in clock/hour positions).

-

Have you tried comparing those readings to Stellarium and seeing which two of the three match (or if they are all different)?

-

1. I'm confused about this point. If anything I've found my dob to be a bit too stiff rather than wobbly. You can increase tension on both alt and az bearings - that will make it stiffer to move. Not sure about wobble though - my 200p feels like a solid piece of kit and it does not budge easily.

2. Here you can do simple upgrade.

I did it on mine and I'm super happy with it. It's a bit long wait at the moment (website says 88 days) but maybe it can be found elsewhere in stock?

3. In order to have tracking - most cost effective solution is either to purchase or DIY equatorial platform.

In general re changing the telescope vs upgrading:

200p is a lot of telescope - I mean aperture. Dobsonian mount is quite manageable for that much aperture. If you want to keep 8" of aperture and have that much comfort on EQ or tracking AltAZ mount - it's going to cost you quite a bit of money (compared to this dob of course - if something is expensive to someone is relative thing).

Besides increased cost - there is issue of manageability of scope. Only folded telescope design like SCT will be more manageable / comfortable on EQ mount in 8" size compared to dobsonian mount. If you want newtonian telescope in that size and still want to maintain some comfort - look into AZ mount rather than EQ mount.

Bottom line - if you want to keep things simple and don't want to spend too much money - upgrade focuser with above addition, get EQ platform and forget about imaging.

On the other hand - if you have enough money, then just hold onto the dob and get another scope that is both easily mounted and used on an EQ mount and can be used later for astrophotography.

-

12 minutes ago, jambouk said:

vlaiv, in the absence of a guiding set up, or the ability to tune the mount or undertake a belt mod, is it worth the original poster doing a 10 minute sub with low ISO and maybe a filter to get a longer run of the trailing to see it in more detail?

You don't have to do long exposure to see what is happening, if you already have sequence of short exposures. All it takes is to stack images without alignment. Maximum stacking method is better for reading star trails than average because average "weakens" star signature (averages with background when star moves between subs).
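A toy example of why maximum works better than average here: a single bright "star" moving one pixel per sub across a strip of sky. All values are invented for illustration.

```python
import numpy as np

# 10 unaligned subs of a 1 x 12 pixel strip with a flat sky background.
frames = np.full((10, 1, 12), 100.0)
for i in range(10):
    frames[i, 0, 1 + i] += 1000.0  # star drifts one pixel per sub

average_stack = frames.mean(axis=0)
maximum_stack = frames.max(axis=0)

print("average:", average_stack[0])
print("maximum:", maximum_stack[0])
```

In the average stack the trail is only 100 above the background (the star gets averaged with nine sky values), while in the maximum stack it keeps its full 1000 above the background, so the trail is much easier to read.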

22 minutes ago, jambouk said:

Is periodic error correction that useful if you have a non-permanent set up and are having to do a fresh polar alignment each night?

It can be very useful, but it really depends on what is the main mechanical issue of the mount. Periodic error correction just reduces maximum P2P error and removes frequency components of mount periodic error that are harmonics of worm period. It can't remove other frequency components - like short period error of gear system, or very long period error of RA shaft. The latter is not important as it is changing very slowly - period is usually 24h - or whole RA circle, but the former can be real problem. It is usually much bigger problem if you guide as it is also harder to guide out fast changing error. Belt mod helps there, as it smooths out reduction between motor and worm.

Only issue with periodic error correction that I've found so far is fact that you must park your mount each time (when using EQMod and its VSPEC - variable speed periodic error correction, not sure if hand controller even has PEC and if it does - how it works and if this applies to it as well) or risk losing sync between PEC and mount. Heq5 does not have encoders and position is tracked via stepper ticks - each time you power off it just resets counters and if you are not parked to same position you started when you recorded PEC information - sync is lost - you need to record PEC again.

I had to do it couple of times so far - once due to power failure - mount stopped and it was not parked. Once because of laptop upgrade (reinstall of drivers and PEC file lost on old laptop).

If done with any care - drift due to polar alignment is very small. For example, using this calculator: http://celestialwonders.com/tools/driftRateCalc.html

You can see that for 6' - or arc minutes of error in polar alignment (that is 1/10th of a degree or about 1mm at arms length - 1/5th of full moon), drift rate is about 1.6"/minute - less than a pixel of drift when imaging at 2.5"/px and using 90s exposure.
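You can sanity check that figure without the calculator. As a small angle, worst case approximation (my simplification, not necessarily what the linked tool computes, and it ignores where in the sky you are pointing), drift rate is polar alignment error times the angular rate of the sky:

```python
import math

SIDEREAL_DAY_S = 86164.1  # seconds for one full rotation of the sky


def max_drift_rate(pa_error_arcmin):
    """Worst case drift in arc seconds per minute for a given PA error."""
    error_arcsec = pa_error_arcmin * 60
    sky_rate_rad_per_min = 2 * math.pi / SIDEREAL_DAY_S * 60
    return error_arcsec * sky_rate_rad_per_min


rate = max_drift_rate(6)          # 6 arc minutes of PA error
drift_px = rate * (90 / 60) / 2.5  # 90 s exposure at 2.5 arcsec/px

print(f"drift rate: {rate:.2f} arcsec/min")
print(f"drift in 90 s: {drift_px:.2f} px")
```

That lands close to the 1.6"/minute quoted above and under one pixel of drift per sub.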

But we don't have to assume it is either PA or PE error - we can see from the sub. If trails are in RA direction - it is due to periodic error, if they are in DEC direction - it is polar alignment error.

For the end, I'd like to show you an animated gif that I once made.

Guess which way is RA and which is DEC?

-

Polar alignment is source of such errors only if PA error is very large - in normal cases, you should not be able to see that much drift in minute and a half.

What you will see, however is periodic error. Heq5 is known to have as much as 35" peak to peak periodic error. It has worm period of 638s so it can drift as much as 7-8" per minute and sometimes even more if periodic error is not smooth.

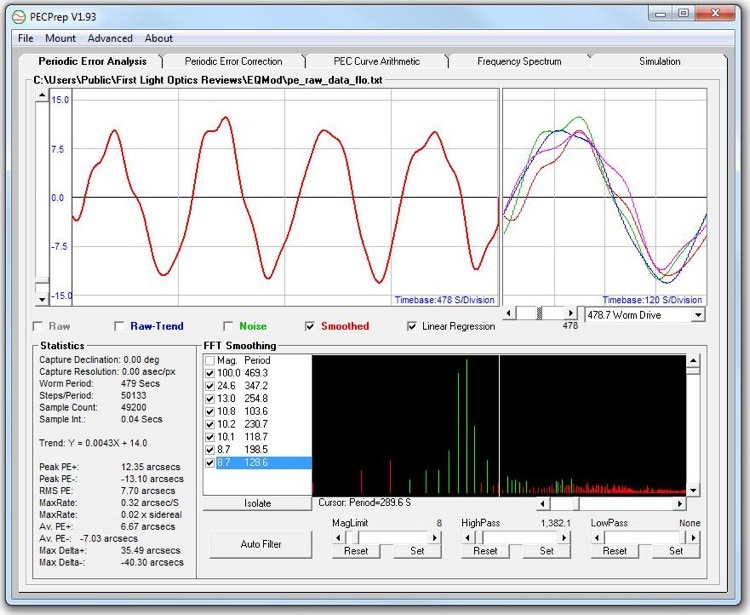

Take for example this image:

It is pecprep view of periodic error of EQ6 mount (main period being 479s). Main points to see are stats on left bottom - Peak + is 12.35, Peak - is 13.10 - that gives 25 P2P error. Also important parameter is MaxRate which is 0.32"/px.

If you have such rate of 0.32"/px and you have exposure of 90s - your drift can be up to 0.32 x 90 = 28.8 arc seconds.

You are imaging at 2-3"/px. This means trails for that much drift would be 10-15 pixels long. This is worst case scenario, and in practice drift rate is never at maximum for long (it resembles sine wave) and in your image, trails are about 4-5px long?
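The worst case numbers above as a tiny sketch, taking MaxRate as 0.32 arc seconds per second. The function name is made up, plug in your own rate, exposure and sampling:

```python
def worst_case_trail_px(max_rate_arcsec_per_s, exposure_s, scale_arcsec_per_px):
    """Trail length in pixels if the mount drifted at max rate all exposure."""
    return max_rate_arcsec_per_s * exposure_s / scale_arcsec_per_px


for scale in (2.0, 3.0):
    trail = worst_case_trail_px(0.32, 90, scale)
    print(f"{scale:.0f} arcsec/px -> up to {trail:.1f} px trail")
```

Observed trails of 4-5px are well inside that envelope, which fits with drift rate only briefly reaching its maximum.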

What can you do about these trails? Two things:

- periodic error correction

- guiding.

I think that you need computer control of your mount + EQMod for both, but I'm not 100% sure. PEC (periodic error correction) could be available via hand controller as well, but like I said, not sure.

I have Heq5 also and have done both of above and yes it helps. There is one more thing that can help but it won't eliminate problem 100% - doing a belt mod.

Belt mod significantly reduces P2P error and hence max drift rate. There could still be some trailing but if it is small enough - it won't be seen (about a pixel or two at the most).

Hope this helps.

Stacking help

in Imaging - Image Processing, Help and Techniques

Posted

Take mono flats.