Posts posted by don4l

-

What an enjoyable thread.

Hoping you get plenty more clear skies! The images are gorgeous, and the island looks lovely.

-

On 27/12/2019 at 10:11, ollypenrice said:

That background sky is way, way better on my monitor. Nice one. I might just give the green a tiny lift to kill the slight magenta cast of the image, but that is a great tweak you did.

Olly

Thanks Olly.

Lifting the green didn't turn out to be as simple as I thought. Parts of the image turned an obvious green when I gave it enough to remove the magenta. To produce this version, I lifted the green a bit on the whole image, and then duplicated it. I applied a layer mask (greyscale of image) and adjusted the green up a bit more in that layer. I think that I can still see some magenta, but I am not good with colour, so I wouldn't bet on it.

I've cropped it a bit more to save bandwidth.

-

12 hours ago, ollypenrice said:

The image is nice.

I think of LRGB this way: a colour filter blocks about 2/3 of the visible spectrum light, a bit less maybe, but about that. An L filter passes all of it. So it takes an hour per colour filter (3 hours) to get as much light as you get in 1 hour of luminance.

In practice I find I don't really need 3 hrs of RGB to match 1 hr of L, I really need 4 hrs or so. The theorists disagree but I'm not a theorist, I just measure what I actually get.

Then we find that we don't need as much colour information as luminance. The RGB can be processed for low noise (essentially it can be blurred) and high saturation. The strong L layer can be processed for sharpness (the opposite of blurring) and depth of faint signal. As a general rule we are not expecting much colour from the faintest signal anyway. When combined, the weaker RGB signal will work well with the stronger L signal.

I think that in your M33 you have a lot of colour noise in your background sky which it would be very easy indeed to remove since there is little real colour information in the background anyway. You could lose the colour 'detail' in the background (which is an artifact anyway) without smoothing the luminance so the sky would not look artificially noise reduced.

Keep an L-RGB mentality throughout the processing!

Olly

I *think* that I've managed to do as you have suggested. I used your method of lifting the left hand side of the curve in "curves adjustment" on the RGB data. I don't know if I have lifted it enough.
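Olly's rule of thumb about exposure times can be put into numbers. This is only a rough sketch: it assumes each colour filter passes a fixed fraction of the light that the L filter passes and that R, G and B get equal time. The function name and the default fraction of one third are illustrative assumptions, not measured values.

```python
def rgb_hours_to_match_l(l_hours, colour_pass_fraction=1 / 3):
    """Total R+G+B hours needed to collect as much light as l_hours of luminance.

    colour_pass_fraction is the assumed share of the L-filter light that
    one colour filter lets through (about 1/3 in the simple theory).
    """
    if not 0 < colour_pass_fraction <= 1:
        raise ValueError("colour_pass_fraction must be in (0, 1]")
    # With total RGB time T split equally, the light collected is T * fraction.
    return l_hours / colour_pass_fraction


if __name__ == "__main__":
    print(rgb_hours_to_match_l(1.0))        # simple theory: about 3 hours
    print(rgb_hours_to_match_l(1.0, 0.25))  # Olly's experience: about 4 hours
```

Olly's "4 hrs or so" corresponds to an effective fraction of about one quarter in this simple model.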

-

18 minutes ago, ollypenrice said:

The image is nice.

I think of LRGB this way: a colour filter blocks about 2/3 of the visible spectrum light, a bit less maybe, but about that. An L filter passes all of it. So it takes an hour per colour filter (3 hours) to get as much light as you get in 1 hour of luminance.

In practice I find I don't really need 3 hrs of RGB to match 1 hr of L, I really need 4 hrs or so. The theorists disagree but I'm not a theorist, I just measure what I actually get.

Then we find that we don't need as much colour information as luminance. The RGB can be processed for low noise (essentially it can be blurred) and high saturation. The strong L layer can be processed for sharpness (the opposite of blurring) and depth of faint signal. As a general rule we are not expecting much colour from the faintest signal anyway. When combined, the weaker RGB signal will work well with the stronger L signal.

I think that in your M33 you have a lot of colour noise in your background sky which it would be very easy indeed to remove since there is little real colour information in the background anyway. You could lose the colour 'detail' in the background (which is an artifact anyway) without smoothing the luminance so the sky would not look artificially noise reduced.

Keep an L-RGB mentality throughout the processing!

Olly

Thanks Olly.

I'll have a go at reducing the background noise in the colour. I've tried to save my work as I went along, so I should be able to go back.

-

CCDCiel has an option to display the image frame as part of its platesolving routine. I suspect that other capture software has the same feature.

I platesolve a reference image from the first night's session, and then I have it as a background image in my Cartes du Ciel. The next night, I platesolve and display the image frame in CdC. I just rotate the camera until the frame lines up with the background image. It usually takes no more than three attempts to be aligned to less than a degree. The beauty is that you don't need to remember any rotation angles or numbers. It takes about 2 minutes to be precisely aligned and rotated using 2-second exposures for the platesolving.

Here is a screenshot in which I would need to move the camera a bit to the left and rotate it a bit clockwise when I stand behind it.

-

This is my first proper LRGB image, and I am very pleased with it. I've always had a brain block in understanding how an "L" could bring quality to a low res RGB image. A few weeks ago, I read that in fact the RGB just colourised the black and white "L" image. This may be obvious to some, but I had never thought of it that way before.

I ran a test in the Gimp. I put a mono layer of some old "L" over a colour layer with the mode set to "luminance". Then I reversed the layers, and changed the blend mode to "HSL Colour". The results were identical! The only difference was that I can get my head around colourising a mono image.
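The equivalence of the two layer orders can be sketched per pixel with Python's standard `colorsys` module. This is a simplified model that uses HSL lightness for both blends; the Gimp's own "luminance" mode may use a different brightness definition, so treat the function names and the arithmetic as assumptions rather than the Gimp's actual code.

```python
import colorsys


def luminance_over_colour(mono, rgb):
    """Mono layer on top: keep the colour pixel's hue and saturation,
    replace its lightness with the mono value (all values in 0..1)."""
    hue, _lightness, saturation = colorsys.rgb_to_hls(*rgb)
    return colorsys.hls_to_rgb(hue, mono, saturation)


def colour_over_luminance(mono, rgb):
    """Colour layer on top: keep the grey pixel's lightness,
    take hue and saturation from the colour pixel."""
    _h, lightness, _s = colorsys.rgb_to_hls(mono, mono, mono)
    hue, _l, saturation = colorsys.rgb_to_hls(*rgb)
    return colorsys.hls_to_rgb(hue, lightness, saturation)


if __name__ == "__main__":
    pixel_l = 0.6                # one pixel of the "L" frame
    pixel_rgb = (0.8, 0.3, 0.2)  # the same pixel in the low-res RGB frame
    print(luminance_over_colour(pixel_l, pixel_rgb))
    print(colour_over_luminance(pixel_l, pixel_rgb))
```

Both functions end up combining the same three numbers (hue and saturation from the RGB, lightness from the L), which is why the order of the layers makes no difference in this model.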

50m L, 25m R, 40m G, 55m B and 40m Ha. (RGB 2x2).

Tak FSQ106, G3-16200, EQ6

CCDCiel, PHD2, CCDStack, Gimp

Any advice would be very welcome.

-

Excellent result for 19 minutes. You are dead right to be pleased.

I like both versions, but if I had to pick one, then I would go for the second one.

-

The reports of Mag -1 are just wrong unfortunately. They appear to emanate from a bug/error at the MPC (Minor Planet Center), which provides the data for CdC and others.

This is a terrible pity because it is due to pass right in front of NGC2403 on the night of the 23rd (I think) of January. I was going to book the next day as holiday so that I could stay up to photograph it. Now, it doesn't look like it will be worth doing. [sigh]

-

I agree with Carole.

Go in June. You won't be missing anything here but you can image from 10 at night until 5 in the morning, and you will get to see stuff that you cannot see from here.

-

Thanks Helen.

I've signed up. Let's hope that I learn something.

-

It's a lovely image.

I know that if I had taken it I wouldn't bother gathering any more data because I wouldn't be able to reproduce the balance of colours and contrasts if I started again.

-

4 hours ago, Davey-T said:

I just use sheets of printing paper, once you find how many are needed for a decent exposure, I try to get about 3 seconds, you can make a note for future reference.

Dave

I'd like to think that I would have figured this out before spending hours on Google (and some money on something that might, or might not, have worked). I've hung 3 sheets of paper over the panel and it works a treat.

-

On 11/12/2019 at 21:16, Benjam said:

OMG, wow, I can’t believe this is the same picture. Thank you Wim!

Hopefully the light box @don4l mentions will help me with flats.

Ben

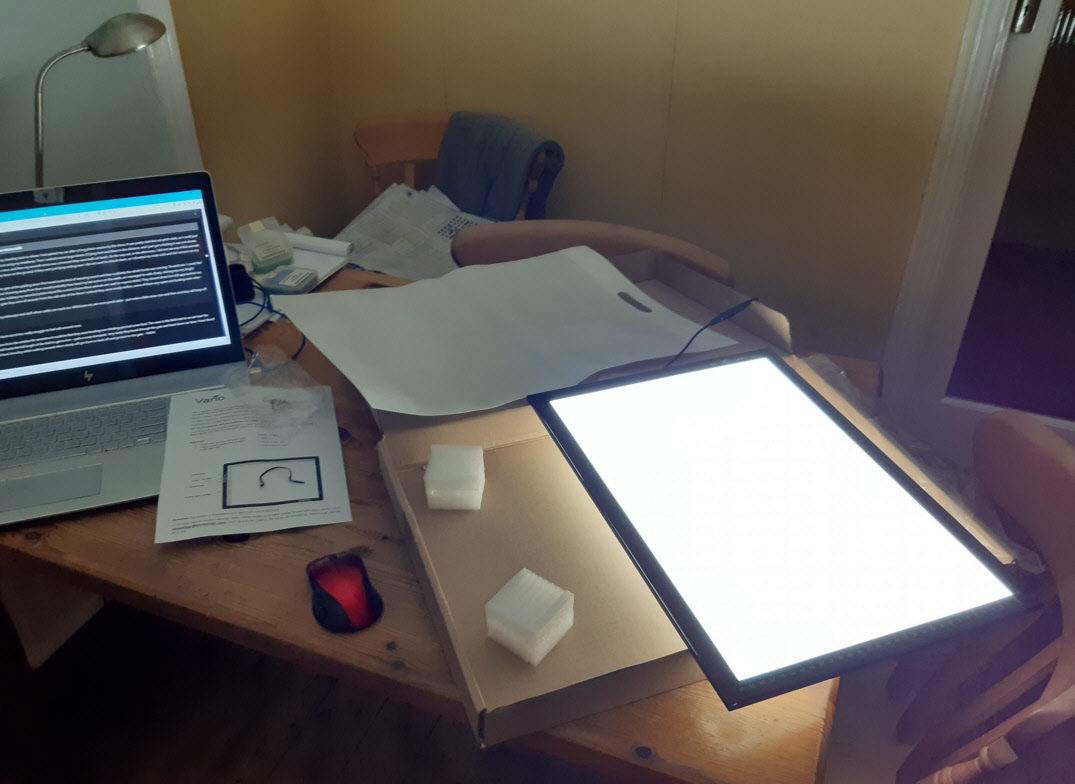

I thought that I should post this update as I tested the light box last night on my FSQ106 at F5.

I think that it will need something to reduce the light. I've seen people refer to some sort of film that can be used for this.

It works with 3nm narrowband filters. It was too bright with L, R, G and B filters. My minimum exposure is 0.2s, and the CCD was fully saturated.

I'll be very surprised if it works at F10 on my Tal200. Even if it does, I don't like to take flats at minimum exposure, so I will still need something to reduce the light.
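The F5 versus F10 comparison can be estimated with simple arithmetic. For an evenly lit panel filling the aperture, the signal per pixel scales roughly with 1/N², where N is the focal ratio. The sketch below is only that rule of thumb; it ignores differences in transmission, filters and pixel size, and the function name is made up for illustration.

```python
def relative_flat_signal(f_ratio, reference_f_ratio):
    """Signal per pixel at f_ratio relative to reference_f_ratio,
    assuming the same panel, exposure and camera (1/N^2 scaling)."""
    if f_ratio <= 0 or reference_f_ratio <= 0:
        raise ValueError("focal ratios must be positive")
    return (reference_f_ratio / f_ratio) ** 2


if __name__ == "__main__":
    # Tal200 at F10 compared with the FSQ106 at F5
    print(relative_flat_signal(10, 5))
```

Under that assumption the F10 scope would see about a quarter of the light. Because the CCD was fully saturated at 0.2s at F5, this alone cannot say whether a factor of four is enough.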

-

That's lovely!

-

Thank you all.

I've got a much clearer picture now. I'll get back to Google and carry on looking. I'm looking for three separate bits: the strap, the controller to vary the voltage (dimmer), and a 12V supply.

-

1 hour ago, Benjam said:

Hi everyone, will this work for taking flats? I’ve checked the dimensions and the light box linked below will be plenty big enough to fit over an 8” SCT. https://www.amazon.co.uk/dp/B01HEQAFQQ/ref=cm_sw_r_cp_tai_D4o8DbQ7GEVZS

I also practiced centring my target last night and ended up taking 50 subs.

That looks like it should be fine.

I recently bought this one :- https://www.amazon.co.uk/gp/product/B00OJ9PQWU/ref=ppx_yo_dt_b_asin_title_o00_s00?ie=UTF8&psc=1

[edit] I've just seen Wim's post, and I am fairly sure that mine produces an even illumination. It may be too bright even on the dimmest setting; I'll find out when I test it. There is a photo of mine illuminated in the "what the Postman..." thread.

I haven't used it as a light box yet, but it looks fine. It arrived promptly, was well packed and has its own power cube.

-

I'm having problems with dew lately. A quick search suggests that dew heaters are typically powered by a "controller" with a car cigarette lighter adapter. I don't have any such power sources.

So, I have a couple of questions.

Is there anything special about these controllers? Do I need one, or would a 12V power cube directly power a dew heater belt?

I'm also a bit unsure about how effective the dew heaters are. Currently, I use a hair dryer whenever I suspect that things are misting up. I don't really like doing this because it throws the focus out, and it takes 10 mins to recover. If I get dew heaters will I be able to do away with the hair dryer completely?

Thanks

Don

-

Have you taken off the lens that comes with the ASI?

-

I'm not usually a fan of starless images, but I do like this. I can see structures that I haven't seen/noticed before. Nice.

-

That's a very nice image. I've never tried to do a decent dark nebula, and this looks like it should be my first.

-

On 06/12/2019 at 11:14, han59 said:

You're using the V-curve option to focus. This is the oldest method. I used the V-curve option in the past, until dynamic focusing was introduced. The Start focus HFD (2) default setting is 20, but for me 15 or even 10 was required to get good SNR values. I had the Near focus HFD position (3) set at 6 or 7. It uses points 2) and 3) to find the focus at 4), so V-curve focusing only works if 3) still lies in the linear area. I think you can select for 3) a value twice the minimum, that is, twice the HFD at focus.

-- Han

Thank you, Han

You've more than answered my questions. I was reluctant to change the results that came from the Calibration routine because I didn't really understand what they meant.

I'll have a proper play with Dynamic focusing next time that I am out. I had assumed that V curve was the recommended option.
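For anyone else puzzled by what a V-curve is doing, here is a minimal sketch of the general idea: away from focus the HFD changes almost linearly with focuser position, so straight lines fitted to the two arms of the "V" cross at the best focus position. This is the textbook two-line version, not CCDCiel's actual routine (which, from Han's description, works from points 2 and 3 plus the calibrated slope). All names and numbers are made up for illustration.

```python
def fit_line(points):
    """Least-squares straight line through (position, hfd) points.
    Returns (slope, intercept)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def v_curve_focus(left_arm, right_arm):
    """Focuser position where the two fitted arms of the V intersect."""
    m1, c1 = fit_line(left_arm)
    m2, c2 = fit_line(right_arm)
    if m1 == m2:
        raise ValueError("arms are parallel; cannot find a focus point")
    return (c2 - c1) / (m1 - m2)


if __name__ == "__main__":
    # Hypothetical measurements, all taken in the linear part of each arm
    inside_focus = [(1000, 20.0), (1100, 15.0), (1200, 10.0)]
    outside_focus = [(1500, 10.0), (1600, 15.0), (1700, 20.0)]
    print(v_curve_focus(inside_focus, outside_focus))
```

Points measured too close to focus fall on the rounded bottom of the curve rather than on the straight arms, which is why Han says point 3 must still lie in the linear area.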

-

2 hours ago, TareqPhoto said:

Those kinds of images are what make us dream about Tak FSQ scopes. I know many will see it as unnecessary and can go with something like Esprit or WO or Stellarvue and such, but a Japanese scope has a reputation and quality. In fact, all Chinese scopes and even some European ones are compared to those big-name, high-quality ones such as AP and Tak in the same class, so I feel: why go with a top-quality Chinese scope if there is something better anyway, if I can afford it?

You don't have SII filter to add to this? Can we have SHO Hubble Palette with only Ha/OIII filters?

I have an old Astronomik SII filter which produces horrible halos. I haven't found any method of dealing with them. I'm yet to be convinced that an SII filter would represent value for money, and I'm not sure how my other half would respond if I brought the subject up now. I've wondered about simulating an SII channel for a fake SHO image. SII data usually looks like a different stretch of Ha data (to me anyway).

-

1 hour ago, celestron8g8 said:

That’s an incredible image, especially the center, but I would love to see the outskirts of the nebula with more data or signal so as to see more detail. But as I said, that’s an incredible capture!

Thank you... and thanks for asking about the fainter nebulosity. There is only 2h 16m of data in this, so I had assumed that any attempt to bring out any more would just show up noise. As it happens, it did become more noisy, but I've learned a method of controlling it (I think).

I opened up my working image in the Gimp and added a new layer with the original data. I changed the stretching so that the faint stuff was more visible. I then colourised this and added it in "Lighten" mode. At this point, it was quite noisy, so I applied a gaussian blur - which didn't seem to affect the rest of the image, but fixed the new faint stuff! Hooray!!!! I've never had any success with blurring before.

Anyway, here is my effort at bringing out the fainter stuff. I've no idea if people will think that it is better or worse than the original. (The stars will be a bit different in this - ignore them please).

-

35 minutes ago, Older Padawan said:

Ok here I go showing how very, very little I know about AP. As someone who is looking at possibly getting into astro photography can you please tell me about flats, darks and bias. How are they taken and how are they used. (Told you I don't know anything lol) Thank you in advance for the information

I'll have a go...

My comments are based on CCD type cameras. There are some differences with CMOS type sensors.

If you take an image with the lens covered (a dark), then there will be some variations in pixel values, and also some/many hot pixels. These will appear as very bright red, green, or blue dots in your final image. However, they tend to appear in exactly the same spot in every image, so if you know where they are, then you can subtract them, or cancel them out. The effect varies with temperature and exposure duration. So you take a bunch of darks at the same temperature and exposure to make a master dark.

If you take an image of a uniformly illuminated white surface, then the image that you get will have imperfections caused by optical issues and by dust, dirt etc in your optical system. Processing software will use these flats to balance up your image to cancel out the imperfections.

The bias is the signal that is present when you just read the dark sensor in the shortest possible exposure. This will be in every image that you take, including your flats and your darks. So, to get a true flat that can be used with any length of exposure, then you should remove the bias before producing the final flat.
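Put together, the arithmetic looks roughly like the sketch below. It is a simplified illustration with frames as plain lists of rows, assuming the master dark matches the light frame in temperature and exposure (so it already contains the bias) and that the flat is short enough to need only bias removal. Real stacking software does more than this, and the function names are invented for the example.

```python
def subtract(frame_a, frame_b):
    """Pixel-by-pixel difference of two frames of equal size."""
    return [[a - b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


def frame_mean(frame):
    """Average pixel value of a frame."""
    values = [v for row in frame for v in row]
    return sum(values) / len(values)


def calibrate(light, master_dark, master_flat, master_bias):
    """Dark-subtract the light frame, then divide by the normalised flat."""
    flat = subtract(master_flat, master_bias)    # remove bias from the flat
    flat_mean = frame_mean(flat)                 # normalise so the flat averages 1
    dark_subtracted = subtract(light, master_dark)  # removes dark current and bias
    return [[p / (f / flat_mean) for p, f in zip(row_p, row_f)]
            for row_p, row_f in zip(dark_subtracted, flat)]


if __name__ == "__main__":
    # Tiny 2x2 example: vignetted corners and one hot pixel
    bias = [[10, 10], [10, 10]]
    dark = [[15, 15], [15, 60]]          # bias + dark current, hot pixel at bottom right
    flat = [[1010, 510], [510, 1010]]    # two pixels receive only half the light
    light = [[115, 65], [65, 160]]
    print(calibrate(light, dark, flat, bias))
```

In this made-up example the hot pixel and the uneven illumination both disappear, leaving the same value in every pixel.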

I remember that when I first read about this, it seemed awfully confusing. Focusing, tracking and simply just finding the target are all more important IMHO.

-

Lightbox for Flats (in Imaging - Tips, Tricks and Techniques)

I never take binned flats.

My stacking software (CCDStack) happily uses unbinned flats with binned images.

Is it worth trying to use software binning?
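For reference, software binning of a flat is simple to do. The sketch below averages each 2x2 block of an unbinned frame held as a list of rows. It is only an illustration of the idea, not what CCDStack does internally, and the function name is made up.

```python
def bin2x2(frame):
    """Average each 2x2 block of pixels; odd trailing rows/columns are dropped."""
    rows = len(frame) // 2 * 2
    cols = len(frame[0]) // 2 * 2
    return [
        [
            (frame[r][c] + frame[r][c + 1]
             + frame[r + 1][c] + frame[r + 1][c + 1]) / 4
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


if __name__ == "__main__":
    unbinned_flat = [
        [1, 3, 5, 7],
        [1, 3, 5, 7],
        [2, 2, 6, 6],
        [2, 2, 6, 6],
    ]
    print(bin2x2(unbinned_flat))
```

Averaging rather than summing is used here because a flat gets normalised anyway, so only the relative pixel values matter.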